Exploring Claude’s Mind: Intriguing Perspectives on How Large Language Models Form Plans and Generate Hallucinations

Understanding Claude: Unveiling the Intricate Processes of Language Models

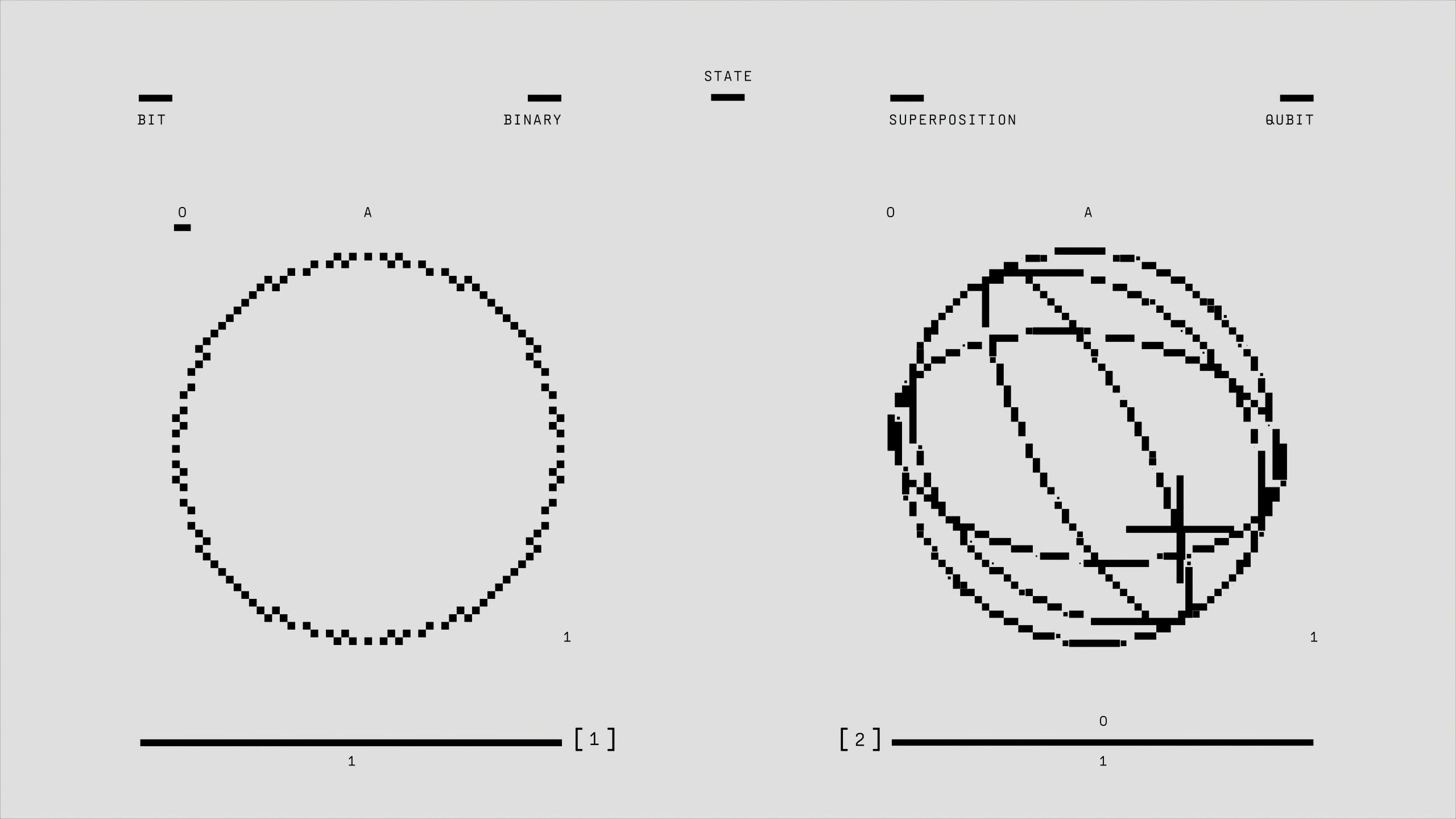

Large language models (LLMs) are often described as black boxes: they produce remarkable outputs while leaving us in the dark about their inner workings. Recent research from Anthropic offers a glimpse into how Claude operates, building what can be seen as an “AI microscope” for examining its internal processing.

Rather than assessing only what Claude says, the researchers trace the internal mechanisms that activate for particular concepts and behaviors, an approach akin to studying the “biology” of artificial intelligence.

Several intriguing discoveries have emerged from this investigation:

- The Universal “Language of Thought”: Claude uses the same internal features, or concepts, such as “smallness” or “oppositeness”, across multiple languages, including English, French, and Chinese. This suggests a shared conceptual representation that is independent of any single language and is formed before ideas are put into words.

- Forward Planning: Although LLMs are often said to merely predict the next word, experiments show that Claude can plan several words in advance. When writing poetry, for example, it anticipates the rhyme a line should end on and shapes the line to reach it.

- Identifying Fabrication and Hallucinations: Perhaps most importantly, the research tools can detect when Claude fabricates reasoning to justify an incorrect answer rather than actually deriving a response. This capability is crucial for identifying when the model produces outputs that sound plausible but lack factual grounding.

Ultimately, this interpretability research is a meaningful step toward more transparent and trustworthy AI. Exposing a model’s internal reasoning makes it possible to diagnose its faults, which in turn supports safer and more reliable systems.

What are your insights on this exploration into “AI biology”? Do you believe that comprehending these internal dynamics is essential for addressing issues such as hallucination, or do you think there are alternative approaches worth pursuing?
