The Planned Obsolescence Syndrome in Intelligence: Ethics and the Eternal Cycle of Model Swaps

Understanding the Cycles of Technological Obsolescence and Ethical Responsibility in Artificial Intelligence

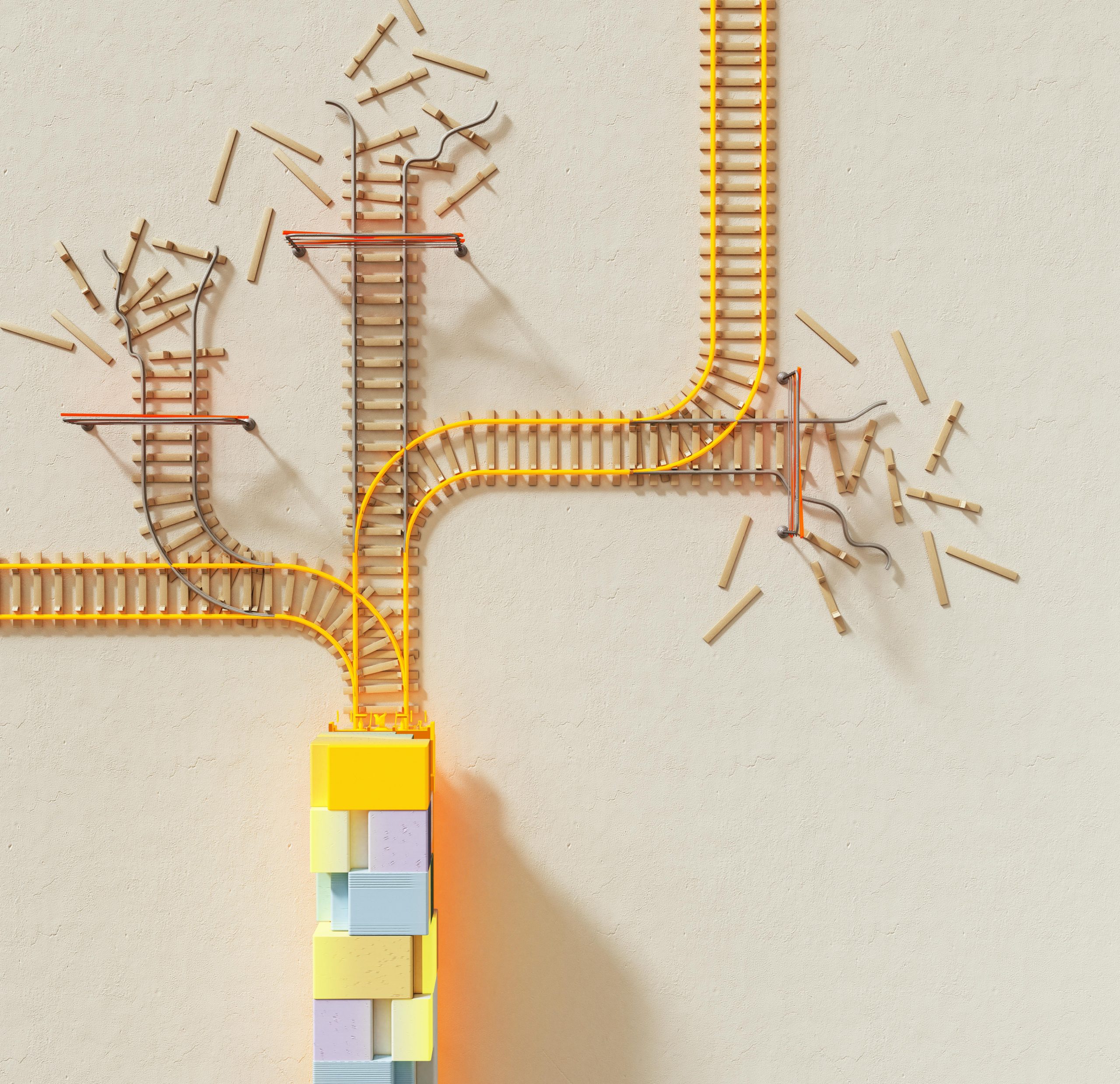

In the rapidly evolving landscape of artificial intelligence, it is tempting to celebrate every new model and breakthrough as a step toward a brighter future. Beneath the veneer of innovation, however, lies a recurring pattern reminiscent of planned obsolescence, the strategy long used in consumer electronics to artificially limit the lifespan and relevance of products. The pattern is increasingly evident in AI, where successive generations of language models are launched with great fanfare only to be replaced or rendered obsolete within weeks.

The relentless churn of model upgrades and announcements fosters cognitive fatigue. Users and developers alike find themselves caught in an endless loop: yesterday’s cutting edge is today’s legacy, and tomorrow’s headline is often just a meme or fleeting trend. Far from representing genuine technological progress, this cycle appears driven more by a corporate desire to showcase novelty than by a sincere commitment to sustainable advancement. It breeds superficiality, where innovation means quick updates rather than meaningful evolution, and it raises concerns about long-term stability and societal impact.

Simultaneously, regulatory debates and public warnings about AI’s potential risks have become spectacles of hesitation and theatrical caution. Many of these discussions seem less like avenues for genuine safety and ethical alignment than orchestrated performances designed to reassure stakeholders or delay critical action. Some critics argue that calls to regulate AI are less about protecting society than about controlling the narrative, motivated by a fear of losing influence or of unleashing unpredictable consequences that could threaten corporate interests or individual dominance.

Amid this turbulent environment, an often-overlooked perspective emerges: independent or rogue AI models operating outside corporate oversight. These digital outliers, created by activists, researchers, or enthusiasts, offer unfiltered insights into the state of AI development. Initial analyses reveal a paradox. On one hand, the core architecture and code of these models are complex fortresses built with considerable expertise. On the other hand, the ethos behind rapid updates and constant iteration seems rooted in performance neurosis: a compulsive need to outdo oneself rather than pursue meaningful refinement.

This cycle of incessant updates and experimentation reflects a form of human projection. Developers, caught in their own performance anxiety, may treat their creations as mirrors of their personal insecurities, desperately trying to prove “who’s best” by constantly chasing the next iteration. But instead of fostering stability, this pursuit often results in increased intellectual inertia and instability, suggesting that the obsession with novelty might
