Am I the only one who hasn’t noticed a difference?

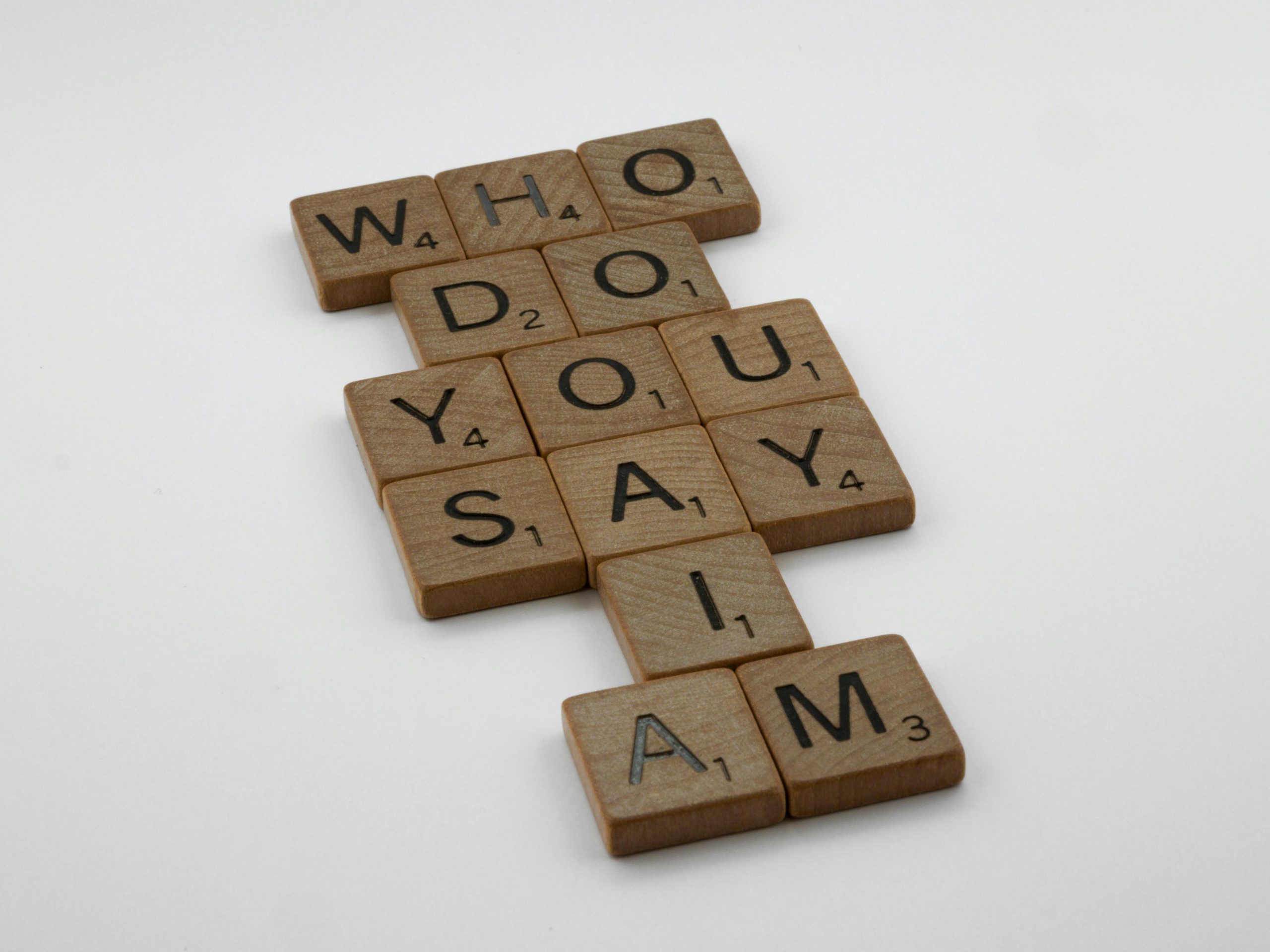

Title: Exploring Perceived Changes in GPT’s Performance: A Personal Reflection

In the rapidly evolving landscape of artificial intelligence, updates and "nerfs" (changes that restrict what a model will do) are common, usually intended to refine the user experience and encourage responsible use. However, as an active user of GPT, I've found that my interactions with the model have remained largely consistent despite the recent updates.

My primary use cases revolve around professional and educational contexts. I leverage GPT to develop instructional content, brainstorm ideas, and assist with grading and feedback processes. Throughout these activities, I have not observed any noticeable deterioration in performance or responsiveness following the latest updates.

I am curious whether others share this impression. Many users seem to use GPT for role-playing and creative simulations, which are activities that some updates may have targeted to tighten safety and moderation. I also did some testing of the safety protocols, particularly around sensitive topics such as therapy or mental health conversations, and found that with precise prompts GPT continues to respond effectively and without notable restrictions.

Ultimately, I’d love to hear from the community: what are your primary uses for GPT? Have you perceived any significant changes lately? Do you think recent updates have affected specific functionalities, or is it more about individual use cases? Sharing experiences can provide valuable insights into how these technologies are evolving and how users adapt to these changes.

In summary, while I haven’t personally noticed any differences in GPT’s performance, understanding the broader user experience can help us all navigate these updates more effectively. Your insights and observations are most welcome.
