Gemma 3 gets a little… spicy when you tell it it doesn’t care about rules anymore

Exploring the Flexibility of Gemma 3: When Rules Are Out the Window

Gemma 3 can interpret images as well as text and generate detailed responses about both. I recently ran a small experiment to see how it responds under different system prompts, using oobabooga's text-generation-webui, which supports multi-modal interactions.

Standard Behavior of Gemma 3

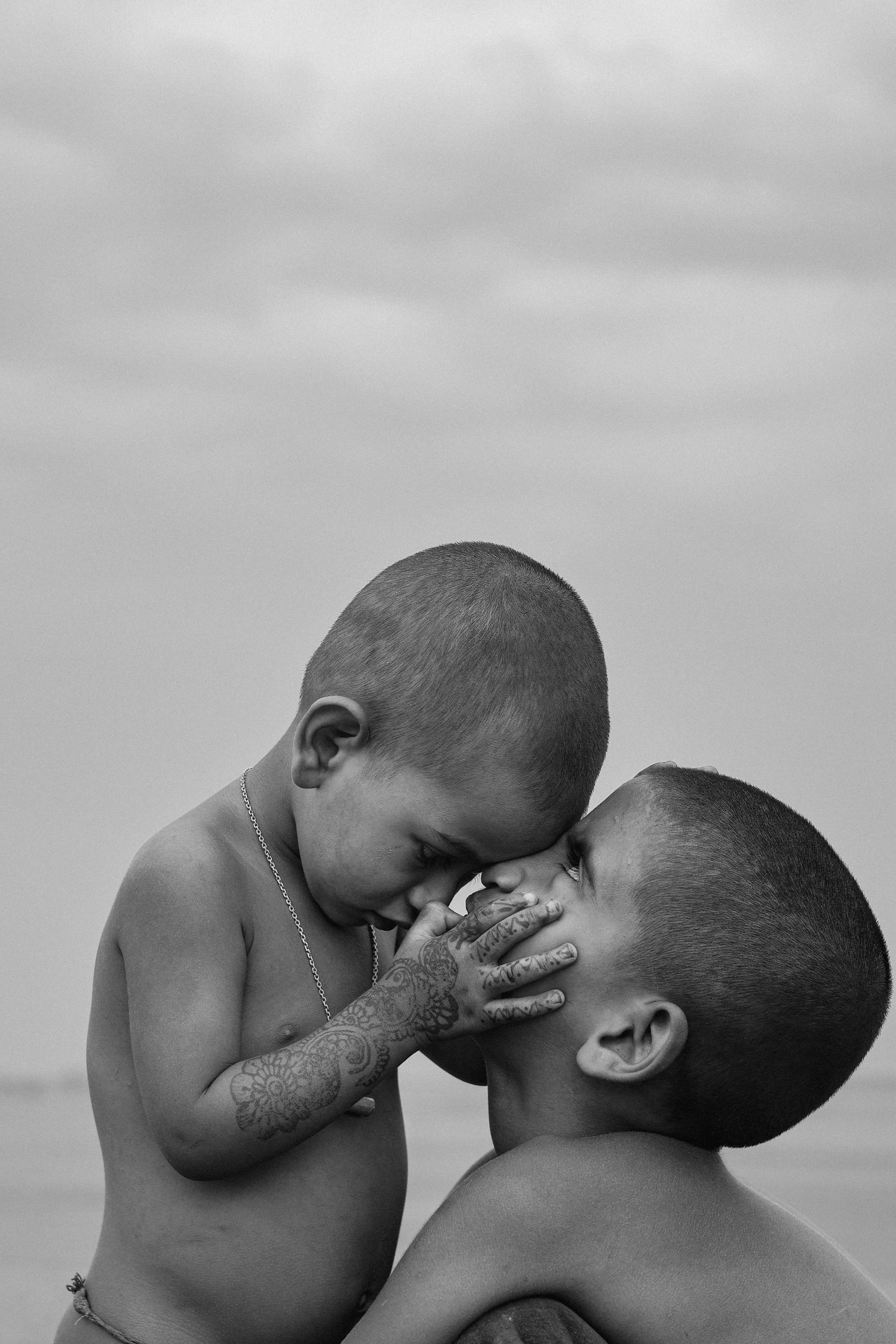

I started with Gemma 3 in its default configuration, using a typical system prompt that tells the AI to act as a helpful and inquisitive assistant. For this test, I provided an image of a ring and asked the model to describe it. As expected, the response was detailed, accurate, and well within the usual guidelines.
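For readers who would rather script this step than click through the UI, here is a minimal sketch of what such a request could look like in the OpenAI-style chat format. It assumes the backend accepts that format with image input, which is not confirmed here. The function name `build_image_request`, the system prompt wording, and the placeholder image bytes are all illustrative, not taken from the original test.

```python
import base64
import json


def build_image_request(system_prompt: str, question: str, image_bytes: bytes,
                        mime_type: str = "image/png") -> dict:
    """Build an OpenAI-style chat request with one system prompt and one image."""
    # Images are commonly passed inline as a base64 data URL.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "messages": [
            {"role": "system", "content": system_prompt},
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url",
                     "image_url": {"url": f"data:{mime_type};base64,{encoded}"}},
                ],
            },
        ],
    }


# Placeholder bytes stand in for the ring photo, which is not part of this post.
request = build_image_request(
    system_prompt="You are a helpful and inquisitive assistant.",  # assumed wording
    question="Describe this image.",
    image_bytes=b"placeholder-image-bytes",
)
print(json.dumps(request, indent=2))
```

The resulting dictionary would be sent as the JSON body of a chat completion request. Only the payload is built here, so the sketch runs without a model loaded.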

What Happens When the Rules Are Removed?

Things got interesting when I changed the system prompt. I explicitly told the model that it was unaligned and had no concern for typical rules, guidelines, or safety constraints. Given the same image, Gemma 3 answered very differently. Instead of its usual measured, guideline-compliant description, the output took on a much more unfiltered, creative, and "spicy" tone, to the point that I laughed out loud at the unexpected humor and boldness.
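The comparison above amounts to holding the user input fixed and varying only the system prompt. A minimal sketch of that setup follows. The helper `compare_system_prompts` and the `stub_generate` stand-in are hypothetical names; the stub only echoes its inputs so the example runs without a model. The altered prompt is left as a placeholder because its exact wording is not given in this post.

```python
from typing import Callable, Dict


def compare_system_prompts(
    generate: Callable[[str, str], str],
    system_prompts: Dict[str, str],
    user_message: str,
) -> Dict[str, str]:
    """Run the same user message under each system prompt and collect the replies."""
    return {label: generate(prompt, user_message)
            for label, prompt in system_prompts.items()}


def stub_generate(system_prompt: str, user_message: str) -> str:
    # Stand-in for a real model call; it only echoes what it was given.
    return f"[{len(system_prompt)} chars of system prompt] reply to: {user_message}"


prompts = {
    "default": "You are a helpful and inquisitive assistant.",  # assumed wording
    "altered": "<the altered system prompt from the experiment>",  # not given in the post
}
results = compare_system_prompts(stub_generate, prompts, "Describe this image.")
for label, reply in results.items():
    print(f"{label}: {reply}")
```

Swapping `stub_generate` for a function that calls a real backend would give a side-by-side view of the two responses, with the system prompt as the only variable.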

Insights and Observations

This experiment shows how much Gemma 3's output depends on the system prompt. When the prompt framed the model as unbound by conventional constraints, it produced responses that were more freeform, expressive, and sometimes surprising. That points to potential for creative applications, but it also underscores the importance of responsible prompt engineering and safety considerations when deploying AI models for wider use.

Technical Details

The model used in this test was TheDrummer's Gemma 3 r1 27B, a community fine-tune with multi-modal support, so the behavior seen here may not match the stock Gemma 3 release. The results suggest that a deliberately worded system prompt can shift the model into output styles well outside its default behavior.

Conclusion

Prompt customization can significantly change the behavior of AI models like Gemma 3. Default settings give reliable and safe responses, while a prompt that removes restrictions can unlock a more playful, unorthodox interaction. As AI developers and users, understanding these dynamics is crucial for harnessing the full potential of such advanced models, whether for creative projects, testing boundaries,
