I built a multi-agent system based on this subreddit and its feedback. Here’s how I solved the biggest problems.

Building a Resilient Multi-Agent AI System: Insights and Solutions Inspired by Community Feedback

As AI tools evolve rapidly, many users keep running into the same persistent problems, which get in the way of using and integrating these tools effectively. To address these common pain points, I set out to design a more robust, self-correcting AI system, drawing on community discussions and advanced AI techniques. This article outlines the conceptual framework, the architecture, and the solutions I implemented for some of the most prevalent issues users face today.

Understanding the Community’s Challenges

By analyzing relevant forums, including a dedicated subreddit and other technical communities, I identified recurring failures and frustrations: AI hallucinations, memory limitations, lack of oversight, and difficulty performing specialized tasks efficiently. To develop targeted solutions, I used Gemini to study these patterns in depth, which led to a hierarchical multi-agent architecture.

The Concept of a Multi-Agent System

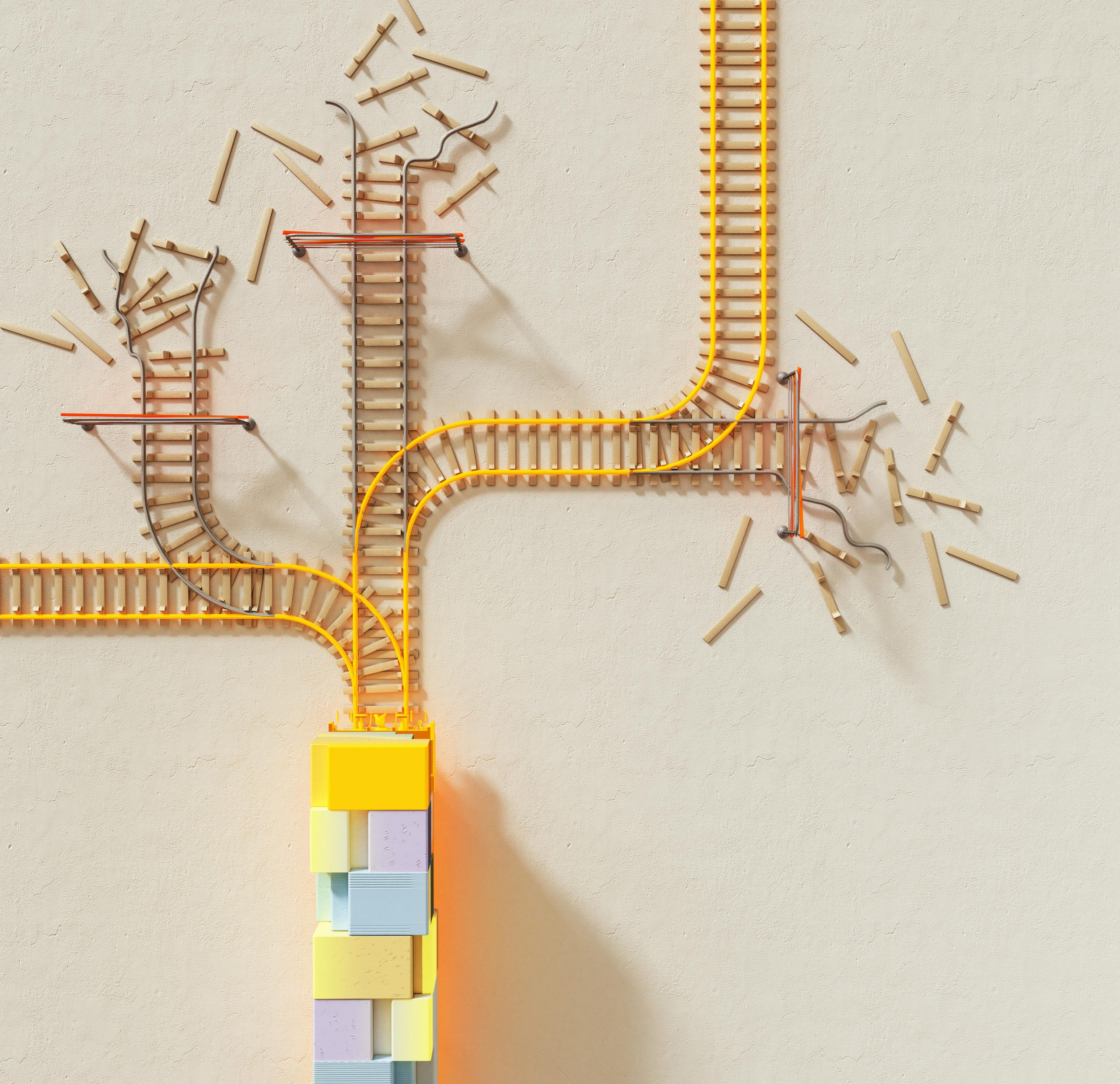

Unlike a single monolithic model, a multi-agent system coordinates a group of specialized agents, each with a dedicated purpose, that work together under a central controller. The approach resembles a team of human experts whose leadership delegates each task to the appropriate specialist.

Key components of this architecture include:

- Executive Controller: Serves as the central coordinator, assigning tasks and managing the overall workflow.
- Specialized Agents: Each agent focuses on a specific domain, such as creative writing, research, or reasoning.
- Metacognitive Controller: Acts as a supervisory agent that monitors, verifies, and corrects the actions of the other agents, making the system more reliable and able to correct itself.

This layered structure is intended to make the system more resilient: because specialists are supervised rather than trusted blindly, a failure in one agent is less likely to compromise the whole system.
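To make the division of labor concrete, here is a minimal Python sketch of the three roles. It is not the system's actual code. The agent functions are hypothetical stand-ins for model calls. The keyword-based routing in `ExecutiveController.route` is an assumption, since a real controller might instead ask a model to classify the task. `MetacognitiveController.verify` performs only a placeholder check.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, Dict


# Hypothetical specialists: in a real system each would wrap an LLM call with its own prompt.
def research_agent(task: str) -> str:
    return f"[research] findings for: {task}"


def writing_agent(task: str) -> str:
    return f"[writing] draft for: {task}"


def reasoning_agent(task: str) -> str:
    return f"[reasoning] step-by-step answer for: {task}"


@dataclass
class MetacognitiveController:
    """Supervisor that checks a specialist's output before it is accepted."""

    def verify(self, agent_name: str, output: str) -> bool:
        # Placeholder check (assumption): the output must be non-empty and
        # tagged by the agent that was asked to produce it.
        return bool(output.strip()) and output.startswith(f"[{agent_name}]")


@dataclass
class ExecutiveController:
    """Central coordinator: routes each task to a specialist, then has the result verified."""

    agents: Dict[str, Callable[[str], str]]
    supervisor: MetacognitiveController = field(default_factory=MetacognitiveController)

    def route(self, task: str) -> str:
        # Naive whole-word keyword routing (assumption); a model-based classifier could replace it.
        words = set(re.findall(r"[a-z]+", task.lower()))
        if words & {"write", "story", "poem"}:
            return "writing"
        if words & {"find", "research", "sources"}:
            return "research"
        return "reasoning"

    def run(self, task: str) -> str:
        name = self.route(task)
        output = self.agents[name](task)
        # The supervisor gets the final say before anything is returned.
        if not self.supervisor.verify(name, output):
            raise RuntimeError(f"{name} agent output failed verification")
        return output


controller = ExecutiveController(agents={
    "research": research_agent,
    "writing": writing_agent,
    "reasoning": reasoning_agent,
})
print(controller.run("Write a short story about a lighthouse"))
# -> [writing] draft for: Write a short story about a lighthouse
```

Keeping verification in a separate object means the supervisor can be made stricter, or backed by its own model, without touching the routing logic.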

Addressing Common Failures with Targeted Solutions

1. Mitigating Catastrophic Loops and Persona Drift

The Challenge: Users frequently report AI models becoming stuck in infinite loops, losing their defined persona, or producing nonsensical responses. Such failures stem from the model’s inability to self-correct reasoning errors.

The Solution: A Metacognitive Controller, a dedicated supervisory agent, addresses these issues. It continuously monitors the outputs and reasoning of all other agents, checks them for consistency, and corrects deviations. This self-regulating feedback loop is designed to reduce looping errors and persona drift, leading to more coherent interactions.
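The post does not specify how the Metacognitive Controller detects these failures, so the following is only a minimal Python sketch under stated assumptions. `LoopAndDriftMonitor` is a hypothetical name. It flags a loop when an output is nearly identical (by `difflib.SequenceMatcher` similarity) to one of the last few outputs. It flags persona drift when the output contains a phrase the persona is told to avoid. When either check fires, it returns a corrective instruction that a supervisor could feed back to the offending agent.

```python
from collections import deque
from difflib import SequenceMatcher
from typing import Deque, List, Optional


class LoopAndDriftMonitor:
    """Hypothetical supervisory check for repetition loops and persona drift.

    Assumptions (illustrative, not the author's implementation):
    - a loop is an output whose similarity ratio to one of the last `window`
      outputs is at least `threshold`;
    - persona drift is an output containing any of `forbidden_phrases`.
    """

    def __init__(self, forbidden_phrases: List[str], window: int = 3, threshold: float = 0.9):
        self.forbidden = [p.lower() for p in forbidden_phrases]
        self.recent: Deque[str] = deque(maxlen=window)  # sliding window of recent outputs
        self.threshold = threshold

    def check(self, output: str) -> Optional[str]:
        """Return a corrective instruction if a problem is detected, else None."""
        text = output.strip().lower()
        for previous in self.recent:
            if SequenceMatcher(None, previous, text).ratio() >= self.threshold:
                return "Loop detected: you are repeating yourself. Take a different approach."
        self.recent.append(text)
        for phrase in self.forbidden:
            if phrase in text:
                return f"Persona drift: avoid '{phrase}' and stay in character."
        return None


monitor = LoopAndDriftMonitor(forbidden_phrases=["as an ai language model"])
print(monitor.check("The capital of France is Paris."))  # -> None
print(monitor.check("The capital of France is Paris."))  # -> Loop detected: ...
print(monitor.check("As an AI language model, I cannot help."))  # -> Persona drift: ...
```

String similarity and phrase lists are crude proxies. A more capable supervisor might compare embeddings or ask a separate model to judge consistency, but the control flow stays the same: observe, check, and return a correction.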

2. Ensuring Contextual Continuity

The Challenge: