Uncovering How ChatGPT Can Detect Your Location Using a Photo

Unveiling How AI Image Geolocation Can Be Manipulated

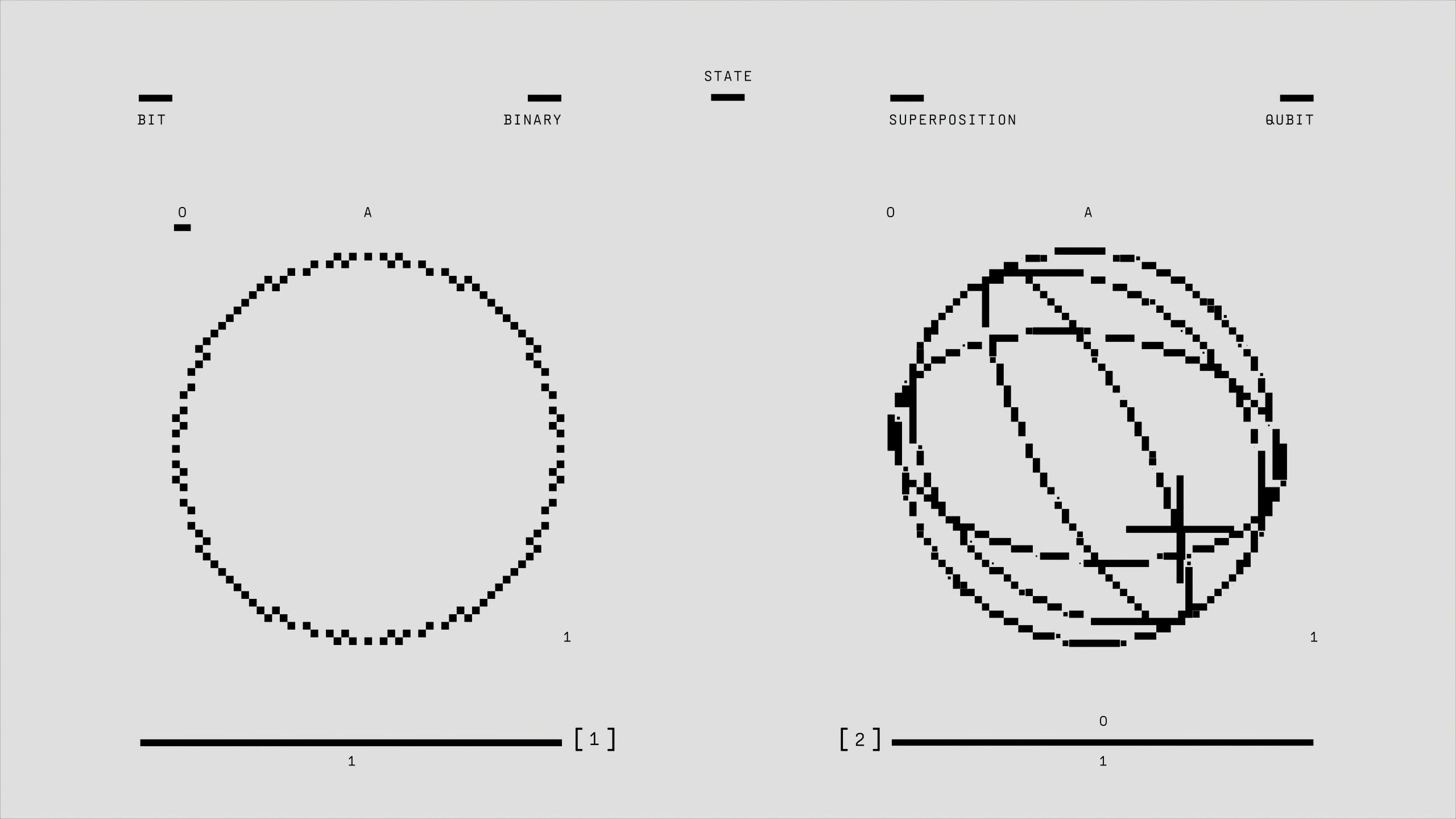

As artificial intelligence evolves rapidly, one intriguing capability of models like ChatGPT is their ability to analyze a photo and infer where it was taken. Although this capability is remarkably advanced, recent experiments show that these systems can be deliberately deceived with simple image perturbation techniques.

By subtly modifying a photograph's pixels, it is possible to trick AI models into misidentifying the location it depicts. For instance, with targeted adjustments, a photo of San Francisco could be identified by the AI as a scene from Boston. This highlights both the strength of AI image recognition and the vulnerabilities that come with it.
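The post does not say how its perturbations were produced, and ChatGPT does not expose its internals for this. As a rough illustration only, the following sketch assumes a hypothetical toy linear classifier with two labels, invented weights, and a made-up 4-pixel "image" (`LABELS`, `WEIGHTS`, `predict`, `targeted_perturbation`, and `epsilon` are all illustrative names, not part of any real system). It applies one targeted sign-gradient step in the spirit of the fast gradient sign method (FGSM), a standard adversarial-example technique swapped in here: each pixel moves by at most `epsilon` in whichever direction raises the "Boston" score relative to the current prediction.

```python
from typing import List

Vector = List[float]

# HYPOTHETICAL toy geolocation classifier: one invented weight row per label,
# acting on a 4-pixel grayscale "image" with values in [0, 1].
# This stands in for a real model; it is not how ChatGPT works internally.
LABELS = ["San Francisco", "Boston"]
WEIGHTS = [
    [0.9, -0.4, 0.6, -0.2],  # San Francisco
    [-0.3, 0.5, 0.1, 0.4],   # Boston
]


def scores(pixels: Vector) -> Vector:
    """Linear score for each label: the dot product of its weights with the pixels."""
    return [sum(w * p for w, p in zip(row, pixels)) for row in WEIGHTS]


def predict(pixels: Vector) -> str:
    """Return the label with the highest score."""
    s = scores(pixels)
    return LABELS[s.index(max(s))]


def sign(v: float) -> int:
    """Return -1, 0 or +1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def targeted_perturbation(pixels: Vector, target: str, epsilon: float) -> Vector:
    """One targeted sign-gradient (FGSM-style) step toward `target`.

    Every pixel moves by at most `epsilon` (an L-infinity budget) and is then
    clipped back into the valid [0, 1] range.
    """
    source = LABELS.index(predict(pixels))
    t = LABELS.index(target)
    # For a linear model, the gradient of score[t] - score[source] with respect
    # to the pixels is simply WEIGHTS[t] - WEIGHTS[source].
    grad = [wt - ws for wt, ws in zip(WEIGHTS[t], WEIGHTS[source])]
    return [min(1.0, max(0.0, p + epsilon * sign(g))) for p, g in zip(pixels, grad)]


if __name__ == "__main__":
    photo = [0.6, 0.4, 0.5, 0.5]  # made-up pixel values
    print(predict(photo))  # San Francisco
    print(predict(targeted_perturbation(photo, "Boston", epsilon=0.15)))  # Boston
```

In this toy setup a budget of 0.15 per pixel is enough to flip the label, while 0.05 is not, which mirrors the idea that small, targeted changes can redirect the model's answer. A hosted model does not hand out gradients, so a real attack would need another route (for example, crafting the perturbation on a surrogate model and hoping it transfers); that part is outside this sketch.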

There’s talk within the tech community about building a free service based on this idea, which would let users experiment with changing an image’s perceived location. Such a tool could have both creative uses and privacy implications, which underscores the importance of understanding AI’s limitations and its potential for misuse.

If this topic interests you, stay tuned: more detailed insights and developments are on the way. Feedback and thoughts are welcome as we continue to explore the interplay between AI capabilities and their susceptibility to manipulation.
