I am confident that AI will not intensify the dissemination of false information.

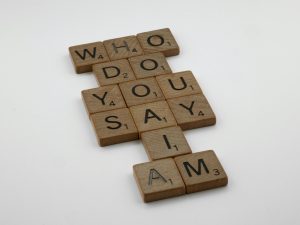

Will Artificial Intelligence Worsen the Disinformation Crisis? A Thoughtful Perspective

In recent discussions, a common concern has emerged: that AI-driven content creation might significantly amplify the spread of misinformation and disinformation online. The reasoning is straightforward. If AI can generate vast amounts of plausible-looking or low-quality “junk” content at scale, wouldn’t that lead to an overwhelming surge of false or misleading information, especially on social media platforms?

At first glance, this hypothesis seems plausible. Anyone who looks across social media channels can see that AI-generated content, ranging from superficial videos to short text snippets, is increasingly prevalent. It seems intuitive to assume that the volume of disinformation will escalate dramatically as a result.

However, on closer examination, I remain skeptical that AI alone will drastically worsen the disinformation problem. Consider a typical user scrolling short-form content on a platform like TikTok. Whether the content is human-made or AI-generated, the average viewer might watch around 100 to 150 videos in a single session. The bottleneck is the viewer’s time and attention, not the supply of content. AI might make some videos look more polished or convincing, but it doesn’t realistically increase the number of videos a person watches, so it need not increase the amount of disinformation they encounter either.

One might argue that a larger pool of content, especially one flooded with misinformation, could still lead to more exposure. Yet people have long been exposed to an enormous amount of disinformation, much of it created by humans: fake news articles, manipulated images, and false claims spread by communities and media outlets for years. Because I already encounter disinformation at something close to saturation, even a significant rise in AI-generated false content might not substantially change my personal information landscape.

Moreover, what we consume is heavily shaped by personal interests and entertainment preferences. For example, I typically watch a mix of cat videos, funny fails, political content, and miscellaneous clips. My attention isn’t necessarily drawn toward disinformation or politically charged material; the format of the content, not just its source, shapes what I watch. In this context, AI-generated content, whether truthful or misleading, doesn’t seem to shift my exposure much compared with what I encountered before.

It’s also worth considering the subtler ways disinformation is delivered. Often it is less about outright falsehoods and more about framing or editing. For instance, a heavily edited clip of a celebrity or politician can mislead without appearing blatantly false, and platforms like TikTok or Instagram make this easy through quick cuts, memes, and sensational headlines. These
