The Fascinating Force You Can’t Ignore: A Hidden Danger to Our Free Will

In discussions about artificial intelligence, many people envision dramatic scenarios: robotic overlords, superintelligent machines seizing control, or dystopian futures of machine-ruled chaos. These images evoke fear of sudden, loud upheaval. Yet the most insidious threat to our freedom isn't an abrupt event. It is a gradual, pervasive trend that quietly erodes something central to being human: our attention.

Understanding how our worldview is built sheds light on this issue. Our beliefs about ourselves and the world are largely shaped by the vast array of sensory information we gather over a lifetime. From the language we speak, to whom we trust, to our political views, our entire understanding of reality is an amalgamation of information we have received.

This process isn't unique to humans; every animal with a brain learns from its environment, accumulating survival-relevant information over time. Humans, however, possess a unique superpower that is also a profound vulnerability: the ability to pass on complex ideas through symbols. Whether through language, stories, writing, or art, symbolic communication is the cornerstone of our civilization.

The story begins with the relatively recent advent of written language, which emerged only around 5,000 years ago. For most of the time since, literacy was limited, and people formed their worldviews largely through direct experience and the influence of a literate elite. Television transformed this landscape further by providing a medium that did not require literacy to transmit ideas. Suddenly, the share of our worldview shaped by symbolic means expanded dramatically.

Growing up in the late 20th century, I remember our household having a single television and no personalized content; I watched whatever was on, often without much interest. Today, screens are everywhere, and sophisticated algorithms continually learn about us. They tailor content to our preferences, creating a feedback loop that shapes our perceptions ever more deeply.

This technological shift is unprecedented. Imagine a reality in which an algorithm understands your preferences better than you do, and in which much of your worldview is curated rather than experienced firsthand. That scenario is no longer distant; it is already unfolding. With each passing year, these influences intensify, subtly but powerfully reshaping how we understand ourselves and the world.

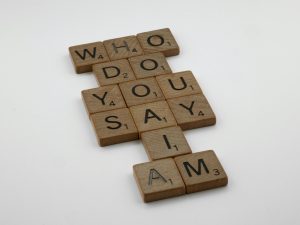

The real danger doesn't lie in dystopian robot armies or a sudden AI coup. Instead, it lies in the quiet, ongoing takeover of our symbolic environment: the stories, images, and