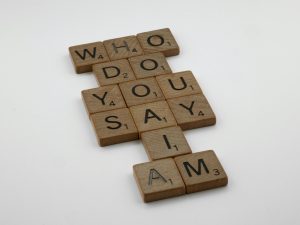

Am I the only one noticing this? The unusual surge of “bot-like” comments on YouTube and Instagram makes me wonder whether we’re witnessing a large-scale AI training effort carried out in public.

Understanding the Rise of “Bot-Like” Comments on Social Media Platforms

In recent months, many users have noticed an increase in seemingly random, generic comments across platforms like YouTube and Instagram. These comments often read as overly positive, grammatically pristine, and devoid of any real personality: think “Wow, great recipe!” under a cooking video or “What a cute dog!” on a pet clip. Some might dismiss them as simple engagement, but a closer look suggests something more may be going on.

A New Landscape for AI Training?

These comments may be more than low-effort interactions. A growing view holds that they could form a large-scale, real-time training environment for artificial intelligence models. If automated accounts keep posting basic, non-controversial feedback, and whoever runs them tracks how those comments affect engagement, these platforms may inadvertently be supplying data that teaches AI systems how humans interact online. In effect, these bots might be helping AI learn the nuances of social communication in a natural setting, practicing human-like responses in a low-stakes context.

Questions and Implications

This observation raises significant questions:

- Who is behind these widespread generic comments? Are major technology companies such as Google or Meta using their own platforms to train AI, aiming to improve chatbots, virtual assistants, or customer service tools?

- Or is this activity orchestrated by less transparent actors, such as state-sponsored groups or other malicious entities, using these comments to make bots more sophisticated for influence operations or disinformation campaigns?

Unintended Data & Ethical Concerns

While these activities might be framed as benign AI development, they also pose ethical concerns. Users could be unwittingly contributing to the growth of AI systems that learn from their interactions, raising questions about consent and transparency.

Final Thoughts

In summary, the proliferation of monotonous, “robotic” comments across social media platforms may not be accidental. It could instead reflect an ongoing, large-scale effort to train AI systems in a natural online environment, whether to improve AI-powered services or for more covert purposes. As users, we should stay aware of this trend, and engaging critically with what we see online matters more than ever.

Have you noticed similar patterns? What do you think is the real intention behind these generic comments: harmless training, or something more concerning?
