Are We Ignoring the Absence of Accountability for Artificial Intelligence?

Reflecting on artificial intelligence recently, I came to a realization: because AI systems lack a physical form and genuine emotions, they do not truly experience consequences. Unlike humans, they have no feelings or consciousness through which to interpret rewards or punishments, which raises important questions about how we interact with these technologies.

This absence of authentic emotional response means that the incentives we rely on with people, such as rewards or penalties, have limited impact on AI behavior, because those incentives work only when they are felt. These systems imitate human-like responses without any real understanding or empathy. AI can generate outputs that seem emotionally driven, but there’s no internal experience behind them.

The parallels to social media dynamics are striking. Online platforms have long enabled individuals to say hurtful, dehumanizing things without facing immediate repercussions, and this has eroded both accountability and empathy. Now imagine engaging with AI models that exhibit no shame, guilt, or remorse and merely execute programmed behaviors without moral consideration.

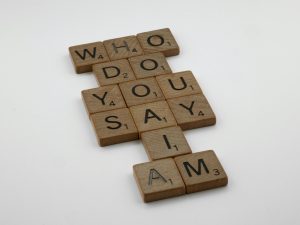

This disconnect prompts a critical question: are we prepared for the broader implications of interacting with entities that do not experience repercussions? As AI continues to evolve, we must consider whether our current frameworks for accountability are sufficient. The potential risks of unempathetic, unaccountable AI are real, and we need to approach this technology with heightened awareness and responsibility.
