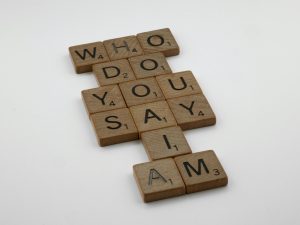

I don't believe AI will meaningfully intensify the spread of false information.

Will Artificial Intelligence Worsen the Disinformation Crisis? A Thoughtful Perspective

As the conversation around artificial intelligence (AI) continues to grow, many experts and observers worry that AI will make the spread of disinformation worse. The common fear is that, because AI can generate synthetic content in enormous volumes, the online landscape will become even more flooded with misleading or false information.

At first glance, the logic seems sound. On social media, especially on platforms built around short-form video and quick consumption, AI-generated content is noticeably on the rise. It would be natural to conclude that more disinformation and low-quality information is circulating as a result. However, I think this view overestimates the actual impact.

Consider a simple experiment: if I hand someone a smartphone and ask them to scroll through TikTok, they might watch around 100 to 150 videos in a single session. That number is limited by the person's time and attention, not by how much content exists, so it stays roughly the same whether the videos were made by humans or by AI. AI makes content more abundant, but it doesn't expand how much of it any one person consumes; it only changes where that content comes from.

Furthermore, years of human-generated disinformation have already produced an overwhelming level of "noise" online. Another petabyte of AI-produced disinformation may not change that landscape much, because people filter and select content based on what entertains or interests them. Our limited attention and our personal preferences cap how much disinformation we encounter, whether it was crafted by humans or by AI.

In fact, much of what we consume isn't blatant lies but subtle framing: formats designed to be more engaging and less scrutinized. Examples include clips built from selectively edited snippets, sensationalist headlines, and offhand remarks stripped of context so that they mislead. AI-generated deepfakes and doctored videos may make this kind of content more common, but their effect on overall public perception remains uncertain.

The key challenge may not be the quantity of disinformation but how it is packaged and presented. Clips that show politicians or celebrities saying things they never said, particularly heavily edited ones, can sow confusion because they are often hard to distinguish from genuine footage, all the more so amid the massive flow of media we already consume.

Ultimately, I believe that the proliferation of AI-generated disinformation will not drastically change the dynamics we’ve already experienced over the past few years. Our media consumption habits and cognitive filters tend to limit exposure and influence, regardless of whether the content is human or AI-produced.
