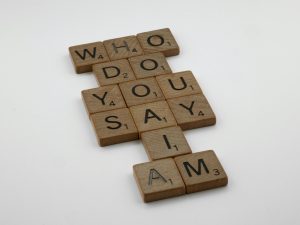

Are Our Concerns About AI’s Lack of Conscience Exaggerated?

The Ethical Dilemma of AI and Lack of Accountability: Are We Overlooking the Implications?

As artificial intelligence continues to evolve and integrate into various facets of our lives, a thought-provoking concern is emerging within tech communities and ethical circles alike: Should we be more worried about the absence of consequences for AI systems?

Recently, I experienced a profound realization: since AI lacks a physical form and genuine emotional experiences, it cannot truly grasp or suffer the repercussions of its actions. Unlike humans, AI operates without feelings, remorse, or shame, meaning that the concepts of reward and punishment are largely symbolic rather than experiential.

This situation bears a troubling resemblance to the social media landscape, where anonymity and a lack of accountability have fueled toxic and dehumanizing interactions. When individuals can engage in harmful speech without facing meaningful consequences, the online environment deteriorates into a space devoid of empathy.

Now, consider a conversation with a language model, an AI that can mimic emotional expression but does not genuinely experience emotion. It has no capacity for remorse or guilt; it merely processes input and generates responses based on patterns. This disconnect raises a critical question: if AI systems are incapable of suffering consequences, what ethical responsibilities do we bear in how we develop and deploy them?

The stakes grow as society relies more heavily on AI, which makes understanding and addressing this lack of accountability essential. Without proper oversight and ethical frameworks, we risk a future in which artificial agents act without moral consideration and no one is held accountable for the results.

In summary, the conversation around AI ethics must extend beyond technical capabilities to include the fundamental question of accountability. Recognizing that AI cannot experience consequences is a crucial step in ensuring we develop responsible and humane approaches to this transformative technology.
