Is Concern Over AI’s Absence of Accountability Justified?

Understanding AI and the Lack of Consequences: A Growing Concern

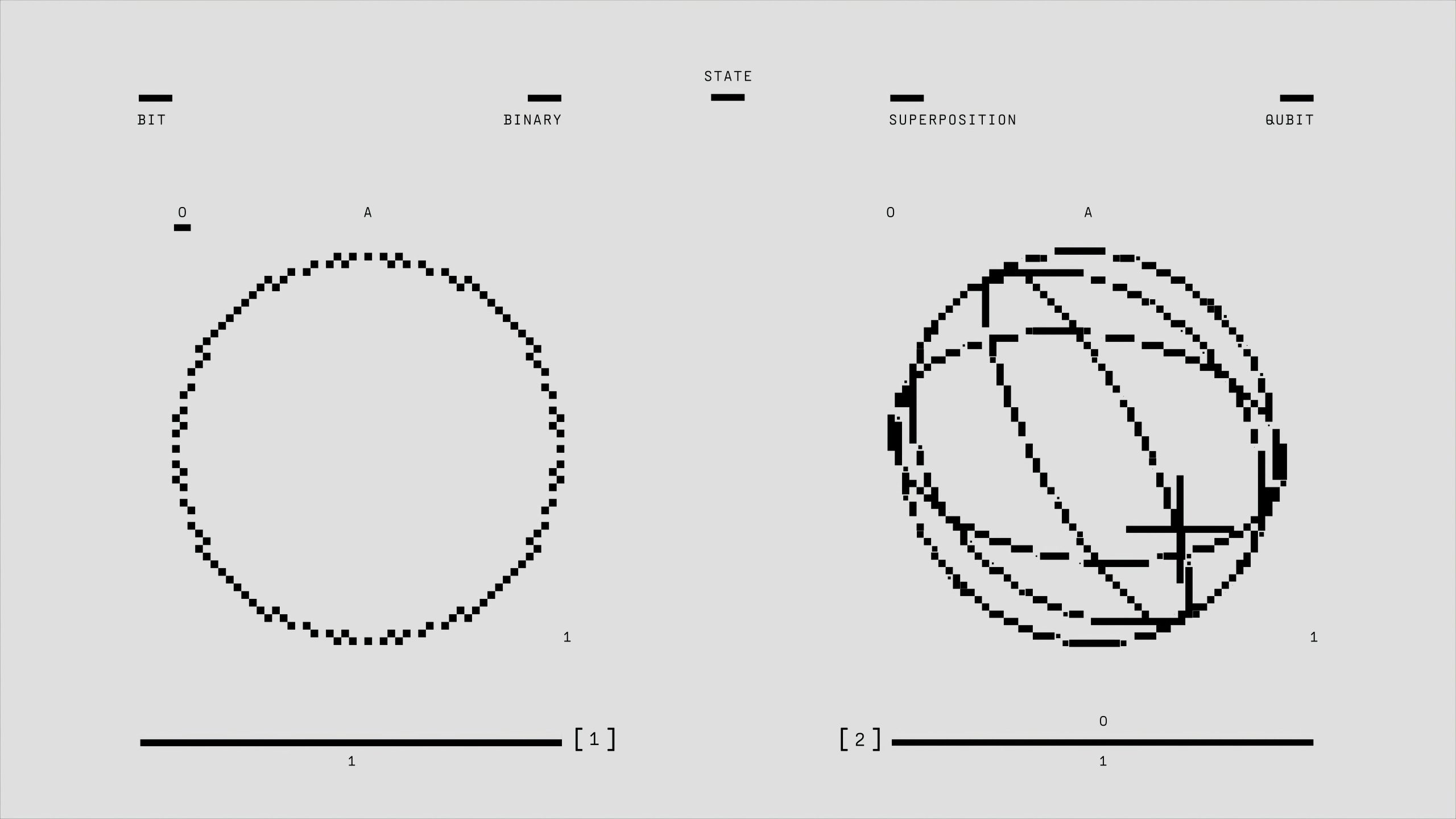

I’ve recently come to a realization about artificial intelligence systems and their limitations. Unlike humans, an AI has no physical form and no genuine emotional experience, so it cannot truly “suffer” or face meaningful consequences for its outputs.

This distinction is crucial. Reward and punishment, the traditional tools for shaping human behavior, lose their meaning when applied to machines that simulate emotional responses without any real feeling or moral understanding. As a result, AI models can generate responses that mimic human emotion while remaining entirely devoid of empathy or remorse.

The parallels with social media are striking. Online platforms let people make harmful or abusive remarks without immediate repercussions, which has dehumanized online interaction. Engaging with a language model that shows no shame, guilt, or remorse raises similar ethical concerns. We are effectively communicating with entities that have no capacity for understanding or emotional accountability.

This prompts an urgent question: should we be more cautious about deploying such emotionless AI? As these systems become more integrated into our daily lives, it is critical to understand their limitations, above all the absence of moral or emotional consequences for what they produce. We need to consider carefully how this technology shapes society and the way we interact with one another.
