Is our worry over AI’s lack of accountability for its actions justified?

The Ethical Dilemma: Should We Be Concerned About AI’s Lack of Consequences?

In today’s rapidly advancing technological landscape, the capabilities of artificial intelligence continue to grow at an astonishing rate. However, this progress raises an important philosophical and ethical question: should we be worried about AI systems that cannot suffer consequences?

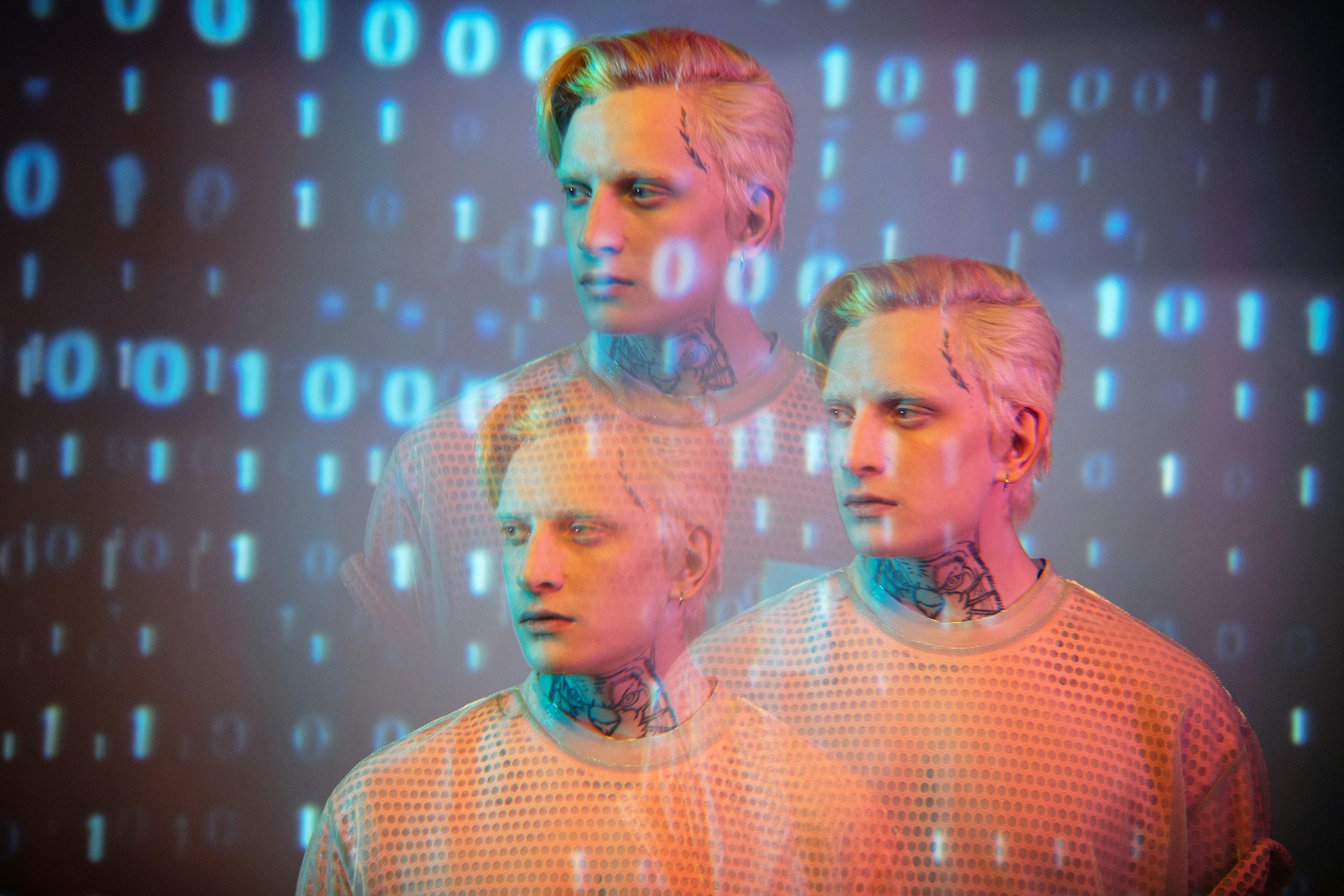

The question rests on a fundamental distinction between humans and machines. Unlike people, AI has no physical body and no genuine emotions. It does not truly experience pain, shame, guilt, or remorse, the emotional responses that often guide human behavior and underpin accountability. Consequently, reward and punishment, which shape human actions, have limited relevance when applied to AI. Machines may mimic emotional responses, but there is no inner experience or moral conscience behind their actions.

This absence of consequences echoes a broader societal concern that is already alarmingly visible on social media platforms. Online interactions often devolve into hostile exchanges in which individuals can say hurtful or abusive things without facing immediate consequences or seeing the other person's reaction. The resulting dehumanization has contributed to a less empathetic and more toxic digital environment.

Now, imagine engaging with sophisticated large language models that possess no sense of shame or guilt. While these systems can generate human-like responses, they lack the capacity to genuinely feel or understand the weight of their words. This disconnect prompts us to reconsider how far we should push their development and what ethical safeguards are necessary.

As AI becomes more integrated into our daily lives, it’s vital to reflect on these issues. If AI systems face no real consequences for their actions, we may be heading toward a future in which accountability and empathy are diminished. That prospect raises profound questions about the kind of digital society we are building and whether it is sustainable in the long run.
