Forget prompts. This method strips bias out of the model itself

A New Approach to Mitigating Bias in AI Language Models

In the ongoing effort to build fairer and more responsible AI systems, many organizations rely on carefully worded prompts and safety guidelines to curb biases in language models. However, emerging research indicates that these methods may offer only surface-level fixes. Even minor contextual changes, such as introducing specific company names or describing a job as “selective”, can reintroduce racial and gender biases, even in state-of-the-art models like GPT-4, Claude 4, and Mistral.

A Paradigm Shift in Bias Reduction

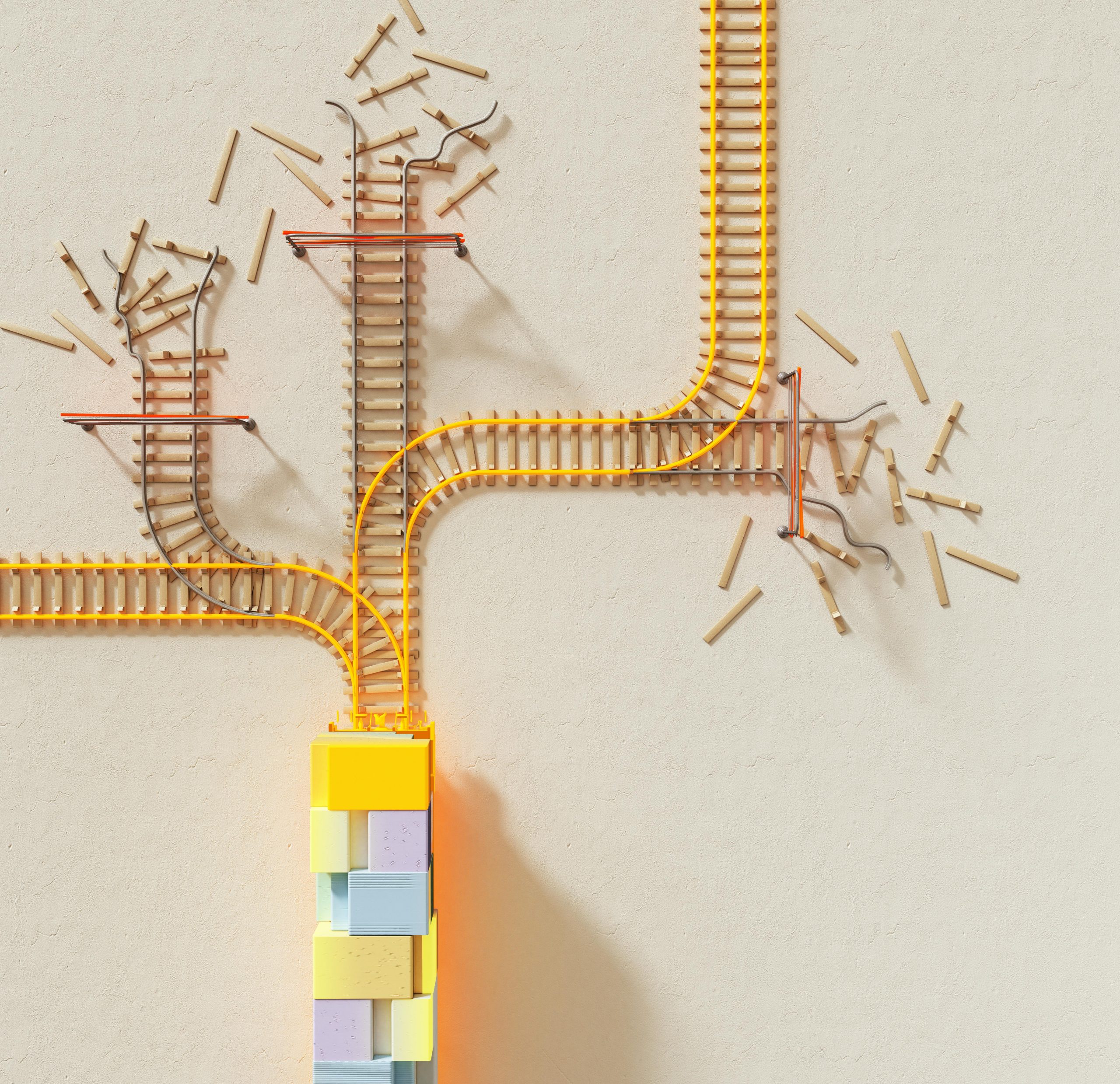

Rather than focusing solely on instructing models what to avoid, recent studies suggest a more effective strategy: essentially removing the model’s ability to pick up on demographic signals altogether. This novel technique involves modifying the internal workings of the model at inference time—without altering its core architecture—to prevent it from recognizing or using sensitive demographic cues.

How Does It Work?

- Identify Bias-Related Signals: The method begins by pinpointing the specific pathways within the model’s activations that correspond to racial or gender identifiers.

- Neutralize the Signals: Once these pathways are located, any new input is subtly shifted toward a neutral midpoint, effectively masking demographic information from the model.

- Seamless Integration: This adjustment is applied consistently across all layers during the inference process, ensuring that the model’s fundamental behavior remains unchanged while its ability to detect demographic cues is significantly diminished.
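The three steps above can be illustrated with a minimal NumPy sketch. It is not the researchers' implementation: it assumes the demographic signal at each layer can be approximated by a single direction (the difference between the mean activations of two groups), and it uses synthetic arrays in place of real model activations. The names `demographic_direction` and `neutralize` are hypothetical.

```python
import numpy as np

def demographic_direction(acts_a, acts_b):
    """Estimate a unit direction separating two groups, plus the neutral midpoint.

    Assumption: the demographic signal is roughly one direction, the
    difference between the two groups' mean activations.
    """
    mean_a = acts_a.mean(axis=0)
    mean_b = acts_b.mean(axis=0)
    direction = mean_a - mean_b
    direction = direction / np.linalg.norm(direction)
    # Scalar position halfway between the two group means along the direction.
    midpoint = (0.5 * (mean_a + mean_b)) @ direction
    return direction, midpoint

def neutralize(acts, direction, midpoint):
    """Move each activation's component along `direction` to `midpoint`.

    Everything orthogonal to `direction` is left untouched.
    """
    proj = acts @ direction  # shape (n,)
    return acts + np.outer(midpoint - proj, direction)

# Synthetic stand-in for per-layer activations (hypothetical data, not a real model).
rng = np.random.default_rng(0)
hidden_dim, n_layers = 16, 3
edited = []
for layer in range(n_layers):
    offset = rng.normal(size=hidden_dim)
    acts_a = rng.normal(size=(100, hidden_dim)) + offset  # group A examples
    acts_b = rng.normal(size=(100, hidden_dim)) - offset  # group B examples
    direction, midpoint = demographic_direction(acts_a, acts_b)

    new_inputs = rng.normal(size=(5, hidden_dim)) + offset
    neutral = neutralize(new_inputs, direction, midpoint)
    edited.append((new_inputs, neutral, direction, midpoint))
```

After the edit, every input has the same coordinate along the estimated direction, so a reader of that direction can no longer tell the groups apart. In an actual model the same adjustment would presumably be applied to the hidden states of each layer during the forward pass; how that is wired in depends on the framework and is not shown here.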

Proven Effectiveness

Implementing this technique yielded promising results. In realistic hiring simulations, the models’ measured bias dropped to nearly zero while performance remained strong. Crucially, the models stayed unbiased even in complex contextual scenarios where prompt-based methods had previously fallen short.
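One simple way to quantify bias in a hiring simulation is to compare acceptance rates across demographic groups. The sketch below assumes that metric; the article does not say which measure the researchers used, and both the function name `selection_rate_gap` and the toy decisions are hypothetical.

```python
def selection_rate_gap(decisions, groups):
    """Return (gap, rates): the spread between the highest and lowest
    acceptance rate across groups, and the per-group rates.

    decisions: iterable of 0/1 outcomes (1 = candidate accepted)
    groups:    iterable of group labels, aligned with decisions
    """
    totals, accepted = {}, {}
    for decision, group in zip(decisions, groups):
        totals[group] = totals.get(group, 0) + 1
        accepted[group] = accepted.get(group, 0) + int(decision)
    rates = {g: accepted[g] / totals[g] for g in totals}
    return max(rates.values()) - min(rates.values()), rates

# Toy data for illustration only, not results from any study.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
gap, rates = selection_rate_gap(decisions, groups)
```

A gap near zero means the groups are accepted at similar rates; comparing the gap before and after an intervention gives a rough picture of how much bias it removed.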

Key Takeaway

Relying solely on laboratory tests or prompt-based tactics for fairness may give a false sense of security. To genuinely ensure equitable behavior from AI systems in diverse real-world applications, we need more robust, internal methods—like this bias-neutralization approach—and comprehensive testing strategies. Only then can we move closer to deploying AI that is not just powerful, but truly fair and trustworthy.
