Exploring Claude’s Mind: Intriguing Perspectives on LLMs’ Planning and Hallucination Behaviors

Unveiling the Inner Workings of LLMs: Insights from Claude’s Cognitive Processes

Large language models (LLMs) are often described as “black boxes”: they generate remarkable outputs while leaving users puzzled about the mechanisms behind them. Recent interpretability research from Anthropic offers a glimpse into the workings of Claude, one of its AI models, using what amounts to an “AI microscope” to examine the model’s internal processing.

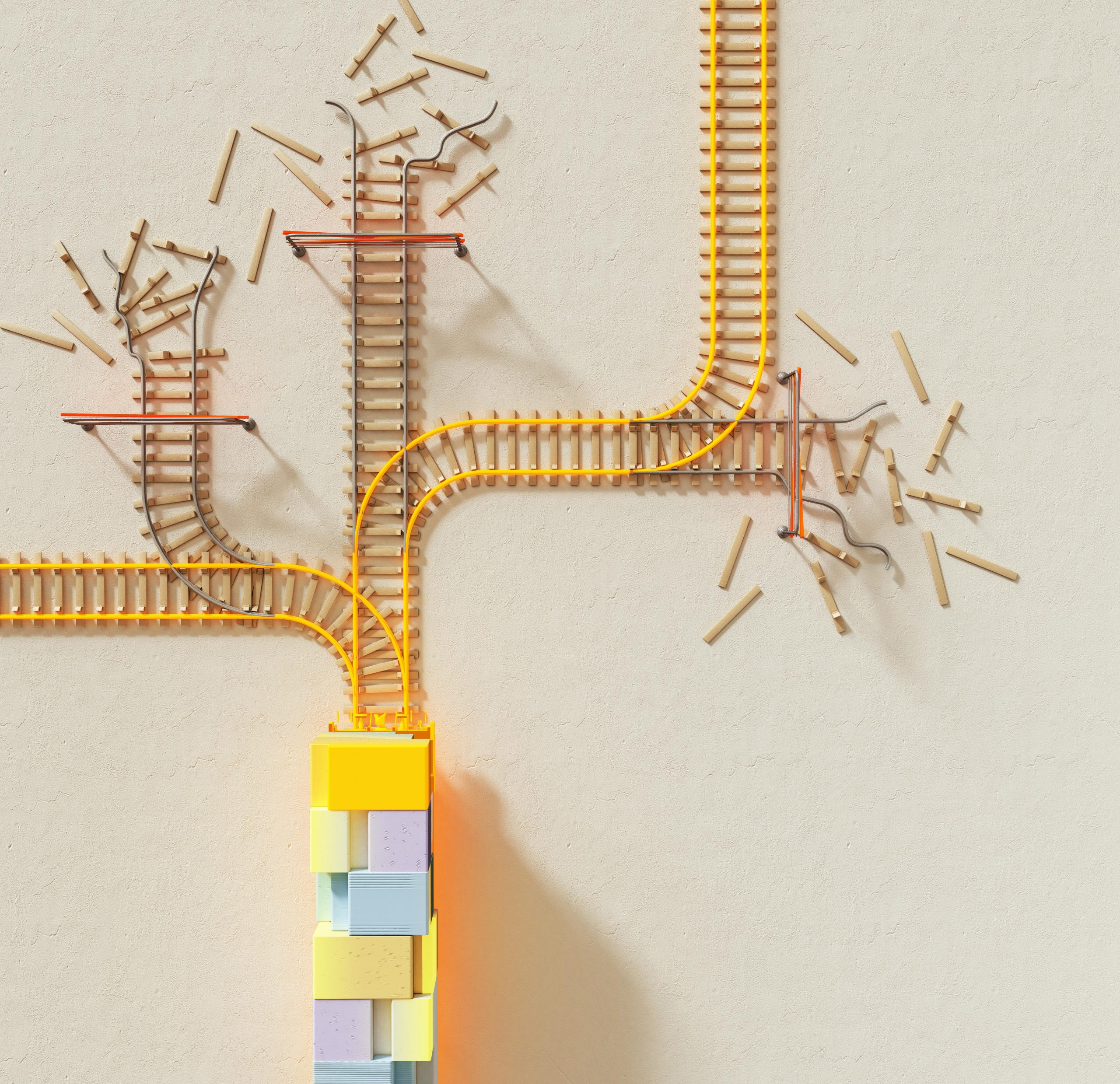

The research looks beyond the outputs Claude produces to the internal “circuits” that activate in response to particular concepts and behaviors. In that sense, it resembles studying the biology of AI cognition.

Several intriguing discoveries have emerged from this investigation:

A Universal Framework for Thought

One of the standout findings is that Claude uses a consistent set of internal features or concepts, such as “smallness” or “oppositeness,” across different languages, including English, French, and Chinese. This suggests that a shared, language-independent conceptual representation exists before specific words are chosen.

Strategic Planning Capabilities

Contrary to the common perception that LLMs operate solely by predicting the next word, the experiments showed that Claude can plan several words ahead. When writing poetry, for example, it anticipates the rhyme a line will end on before producing the words that lead up to it, a level of foresight that goes beyond simple word association.

Identifying Hallucinations

Arguably the most significant result is a set of tools that can reveal when Claude is fabricating reasoning to support an incorrect answer rather than genuinely computing it. In such cases, the model produces plausible-sounding output that lacks factual accuracy. The ability to detect these “hallucinations” has important implications for making AI-generated content more reliable.

The interpretability advances in this research are a significant step toward more transparent and trustworthy systems. By exposing a model’s underlying reasoning, we can better diagnose its failures and improve the safety of AI technology.

What are your thoughts on this intriguing exploration into the “biology” of AI? Do you believe that a deeper understanding of these internal processes is essential for addressing issues like hallucination, or do you see alternative strategies as more viable? We invite you to share your insights in the comments below!
