I believe AI will not worsen the dissemination of false information.

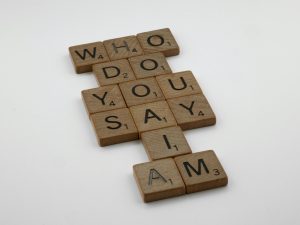

Will AI Really Worsen the Disinformation Crisis? A Closer Look

As AI technology continues to evolve and permeate our digital lives, a common concern is that it may significantly amplify the spread of misinformation. Many worry that generative AI will facilitate an avalanche of “junk” content, making it harder than ever to discern truth from falsehood.

However, on reflection, I believe this fear may be overstated. To see why, consider how we consume content on TikTok and other social media platforms. Whether the content is AI-generated or human-made, the amount of material we take in stays roughly constant. For most of us, a typical session of scrolling through short-form videos amounts to around 100 to 150 clips, regardless of where they came from.

Introducing AI-generated content doesn’t necessarily increase the number of videos we watch; it only shifts where they come from. The key point is that our digital diet is already saturated with a vast amount of misinformation that humans have produced over the years. Because of this enormous backlog, even if AI adds a petabyte of new disinformation, it may not substantially change what we actually encounter or believe. Our attention is limited, and we keep gravitating toward whatever we find engaging.

Moreover, our viewing habits are fairly stable and hard to manipulate. Our preferences tend to cluster around a few categories: entertaining cat videos, funny mishaps, emotional political commentary, and assorted miscellaneous clips. Together these categories form the ‘pie chart’ of our viewing habits. As far as I can tell, the share of disinformation in that pie has remained relatively stable over the past five years, and there is no clear reason to expect it to change drastically simply because AI can generate more content.

That said, AI does have the potential to introduce subtler forms of deception, such as edited clips that show politicians or celebrities saying things they never said. These doctored videos can be highly convincing and harder to detect than straightforward lies. However, given the vast scale of existing misinformation and the way most people consume media, I don’t see them as a game-changer in the broader disinformation landscape.

In conclusion, while AI enables new ways of producing deceptive content, I believe its overall effect on the amount of disinformation reaching the average user is likely to be limited. Our consumption habits, our content preferences, and the sheer volume of existing misinformation already place a ceiling on how much of it we encounter.

What are your thoughts on AI’s role in shaping the future of digital
