Ought We to Worry More About AI’s Lack of Consequences?

The Ethical Dilemma of AI and the Absence of Consequences

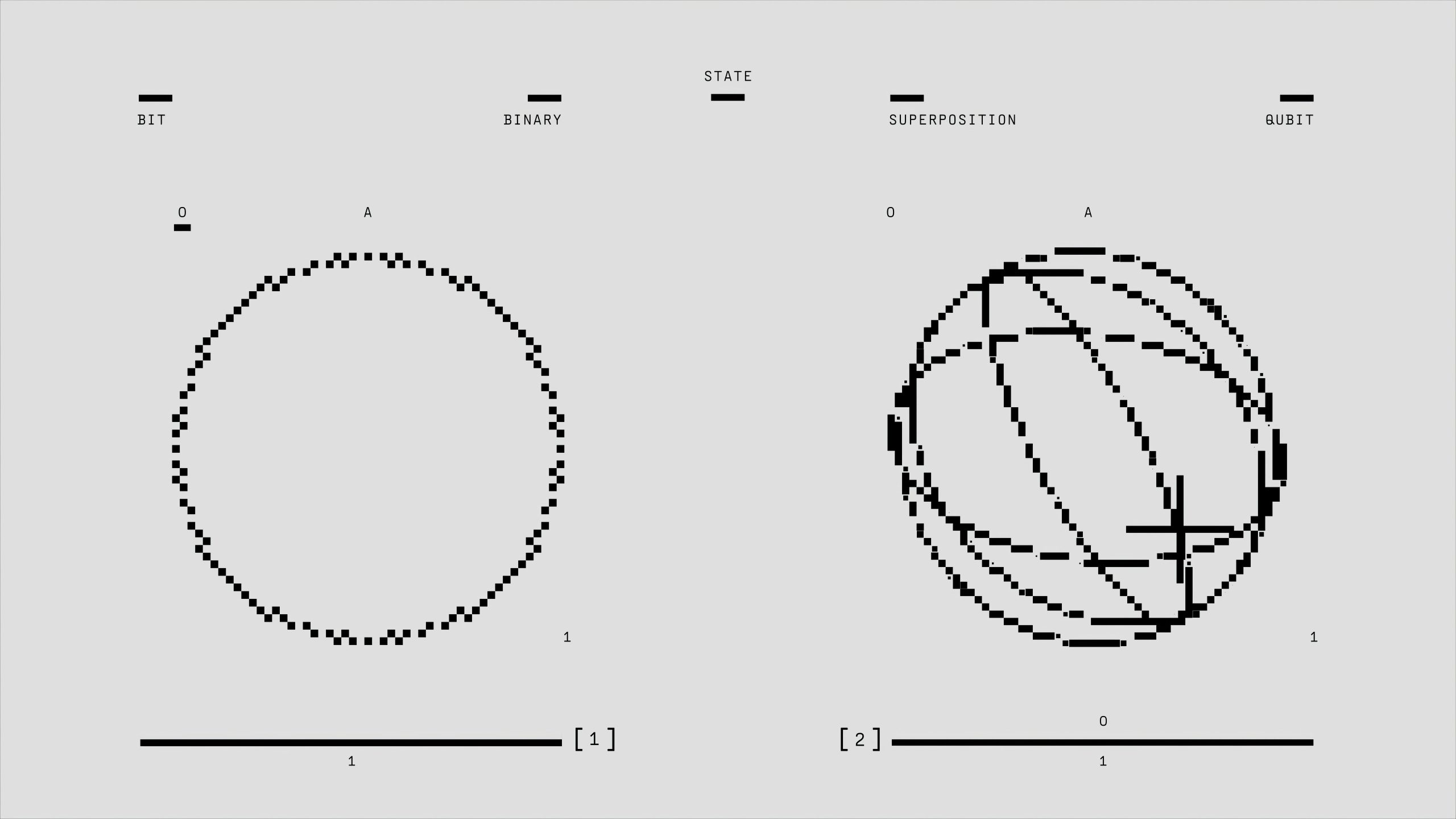

As artificial intelligence advances at a rapid pace, it’s essential to consider the ethical implications of its capabilities and limitations. Recently, I had a profound realization about the nature of AI: without a physical body or genuine emotions, AI systems are inherently incapable of experiencing consequences the way humans do.

Unlike humans, who feel shame, remorse, and accountability when they face the repercussions of their actions, AI systems operate purely on code and algorithms. Rewards and penalties shape their behavior only insofar as they are built into training or programmed as responses to certain inputs; there is no genuine emotional or moral engagement behind what they produce.

This brings to mind a parallel with the rise of social media, where anonymity and distance have fueled harmful and dehumanizing interactions. People say things online that they would never say face-to-face, often without fear of repercussions, and this in turn erodes empathy and accountability.

When engaging with AI language models, however human-like they seem, it’s crucial to remember that they are devoid of shame, guilt, or remorse. They possess neither consciousness nor moral understanding, which makes their behavior fundamentally different from that of humans.

Given these realities, we must ask ourselves: should we be more concerned about the impact of AI systems that cannot suffer consequences? How do we ensure that such technologies are developed and used ethically, in ways that safeguard human dignity and societal values? The conversation about AI ethics is more urgent than ever, and understanding AI’s limitations is a vital part of that dialogue.
