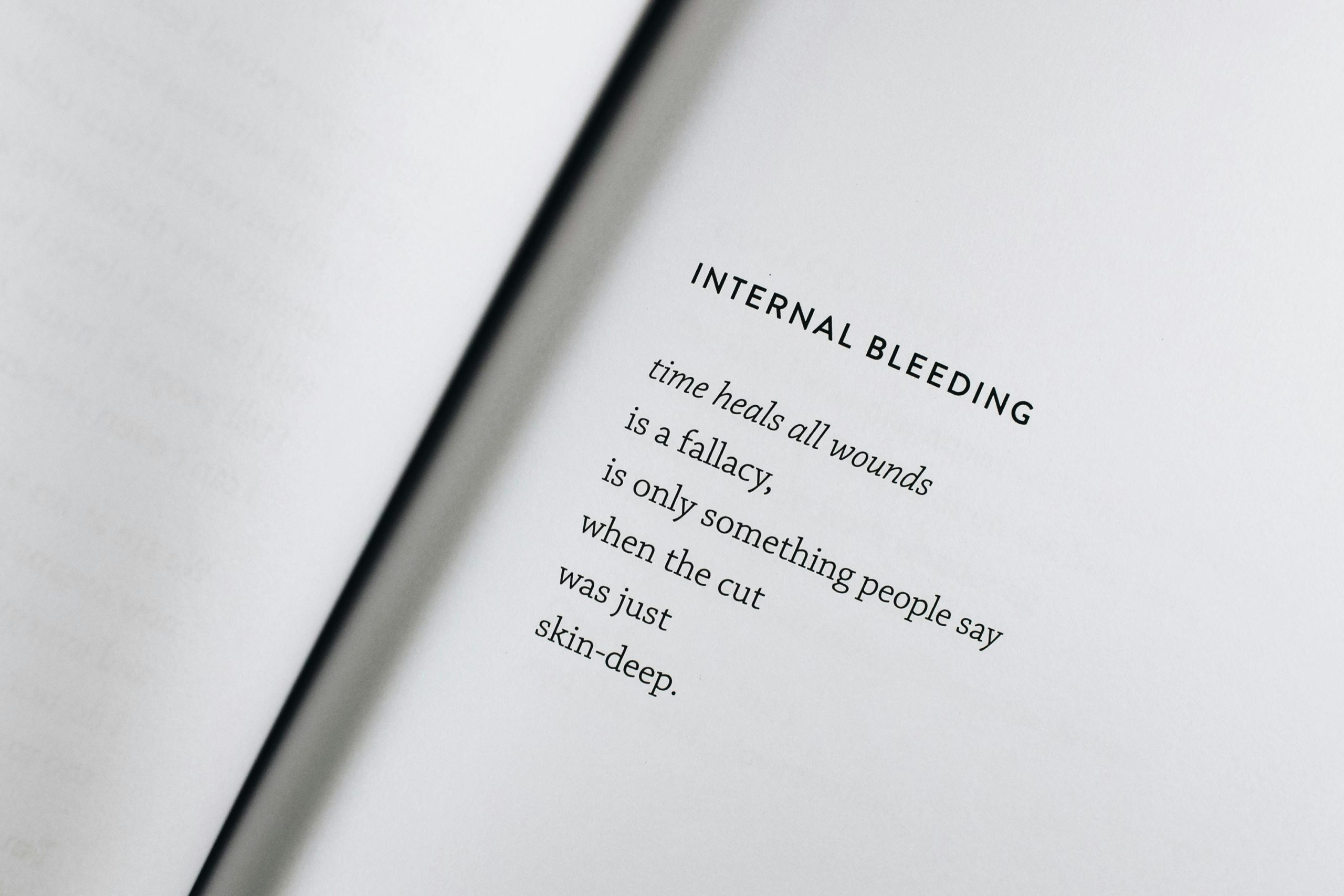

Claiming that “LLMs are not really intelligent” just because you know how they work internally is a fallacy.

Rethinking AI Intelligence: Why Understanding LLMs Doesn’t Diminish Their Capabilities

In recent discussions about artificial intelligence, a common misconception has emerged: that Large Language Models (LLMs) are not genuinely intelligent simply because we understand their internal mechanisms. This view confuses two separate things: how transparent a system is and how capable it is.

In-depth knowledge of how LLMs operate, from their algorithms to how they generate responses, does not negate their sophistication or their ability to perform complex tasks. Explaining the process behind their predictions, such as how they select the next word in a sequence, does not make their outputs any less remarkable or any less useful.
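To make the point concrete, here is a toy sketch of what "selecting the next word" can look like: scores are turned into probabilities with a softmax, and one word is sampled. The function names (`softmax`, `pick_next_word`), the `temperature` parameter, and the example scores are all made up for illustration. A real LLM computes its scores with a large neural network over a vocabulary of many thousands of tokens, so treat this as a simplified picture, not as how any particular model is implemented.

```python
import math
import random


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def pick_next_word(scores, rng, temperature=1.0):
    """Sample one word, weighting each candidate by its softmax probability.

    `scores` maps candidate words to raw scores (hypothetical values here).
    A lower `temperature` makes the highest-scoring word more likely.
    """
    words = list(scores)
    probs = softmax([scores[w] / temperature for w in words])
    return rng.choices(words, weights=probs, k=1)[0]


# Hypothetical scores for continuing "The cat sat on the ..."
toy_scores = {"mat": 3.0, "sofa": 2.0, "moon": 0.1}

rng = random.Random(0)  # fixed seed so the run is repeatable
print(pick_next_word(toy_scores, rng))
```

The step itself fits in a dozen lines and is easy to follow. That simplicity says nothing about the quality of what comes out when the scores are produced by a model trained on enormous amounts of text, which is exactly the distinction this post is about.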

It’s important to recognize that machines operate differently from human brains, and each has distinct strengths and limitations. But consider this: if humanity one day unraveled the full workings of the human mind, would that make our intelligence any less authentic? Quite the contrary. Understanding tends to deepen our appreciation of what makes us unique.

This perspective invites us to reconsider how we measure intelligence in AI. What matters is not whether a system’s inner workings are mysterious, but how effective and impressive its results are. As we continue to explore and understand these systems, our admiration for their capabilities is likely to grow rather than diminish.

What do you think? Does greater understanding of AI diminish or enhance our perception of its intelligence?

Note: Public reception of this topic is mixed. Recent engagement has fluctuated, which reflects the ongoing debate over how well AI is understood.
