Target Propagation: A Biologically Plausible Neural Network Training Algorithm

Exploring Target Propagation: A Biologically Inspired Approach to Neural Network Training

In the quest to develop more biologically plausible neural network algorithms, target propagation emerged in 2015 as a noteworthy alternative to the traditional backpropagation method. Despite its intriguing premise, it hasn’t gained widespread adoption in the deep learning community. So, what are the reasons behind this, and what can we learn from this approach?

One of the most striking aspects of target propagation is its computational cost. Training a simple model on MNIST to roughly 39% accuracy took around five minutes on a CPU, which is significantly slower than conventional, backpropagation-based training. This sluggish performance is a considerable barrier to practical application, especially when scaling up to larger models and more complex tasks.

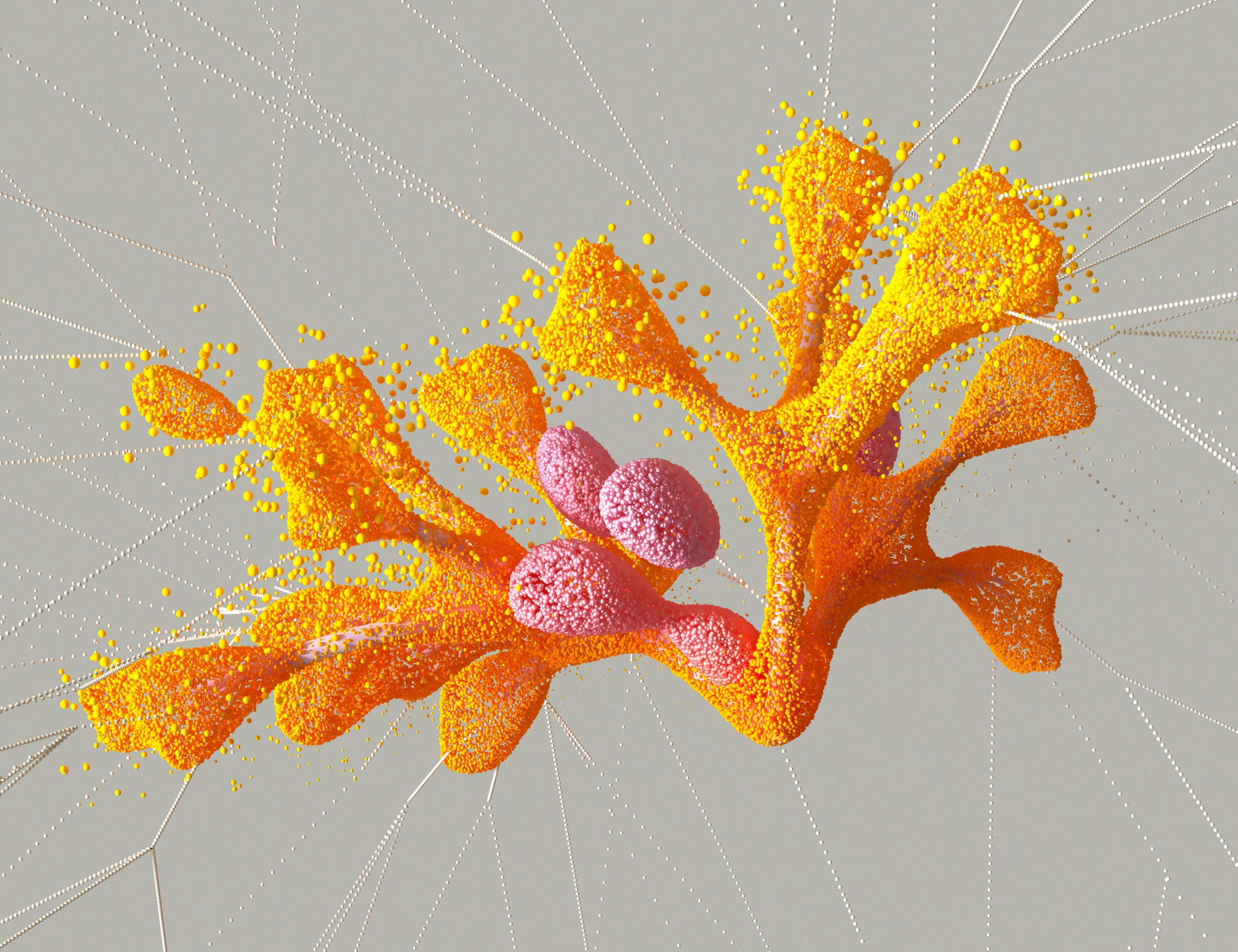

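Nevertheless, the conceptual foundation of target propagation remains compelling. Instead of propagating error gradients backward through the whole network, the algorithm assigns each layer a “target”: a desired value for that layer’s activations. Targets are carried from the output down to the hidden layers by learned approximate inverses of each layer’s mapping, and every layer is then trained to move its own output toward its target using only locally available information. This biologically inspired strategy aims to mimic how real neural systems might learn, offering a more plausible model of brain function.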
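To make the mechanism concrete, here is a minimal pure-Python sketch of one common variant, difference target propagation, under several assumptions: tanh layers, a squared-error output loss, and fixed, hand-picked inverse weights `Vs`. In the actual method the approximate inverses are themselves trained (typically with a reconstruction loss); that step is omitted here for brevity. The function names (`dtp_targets`, `local_update`, `train_step`) and all weight values are hypothetical illustrations, not code from the original implementation.

```python
import math


def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def layer(W, h):
    """Layer mapping tanh(W h); used both for forward layers and for the inverses."""
    return [math.tanh(a) for a in matvec(W, h)]


def dtp_targets(Ws, Vs, x, y, top_lr=0.5):
    """Forward pass, then difference-target-propagation targets.

    Ws[i] maps hs[i] -> hs[i + 1]. Vs[i] (hypothetical, fixed here) parametrises
    the approximate inverse g_i(h) = tanh(Vs[i] h), mapping hs[i + 1] back toward hs[i].
    """
    hs = [x]
    for W in Ws:
        hs.append(layer(W, hs[-1]))

    targets = [None] * len(hs)  # targets[0] stays None: the input needs no target
    # Top target: one gradient step on 0.5 * ||h_L - y||^2 with respect to h_L.
    targets[-1] = [h - top_lr * (h - t) for h, t in zip(hs[-1], y)]

    # Carry targets downward with the difference correction:
    # target_i = h_i + (g(target_{i+1}) - g(h_{i+1}))
    for i in range(len(Ws) - 1, 0, -1):
        g_target = layer(Vs[i], targets[i + 1])
        g_actual = layer(Vs[i], hs[i + 1])
        targets[i] = [h + (gt - ga) for h, gt, ga in zip(hs[i], g_target, g_actual)]
    return hs, targets


def local_update(W, h_in, target, lr):
    """Delta-rule step on the layer-local loss 0.5 * ||tanh(W h_in) - target||^2."""
    out = layer(W, h_in)
    for r, row in enumerate(W):
        delta = (out[r] - target[r]) * (1.0 - out[r] ** 2)  # tanh'(a) = 1 - tanh(a)^2
        for c in range(len(row)):
            row[c] -= lr * delta * h_in[c]


def train_step(Ws, Vs, x, y, lr=0.1):
    """Compute targets, update each layer from its local loss only.

    Returns the output loss measured before the update.
    """
    hs, targets = dtp_targets(Ws, Vs, x, y)
    for i, W in enumerate(Ws):
        local_update(W, hs[i], targets[i + 1], lr)
    return 0.5 * sum((h - t) ** 2 for h, t in zip(hs[-1], y))


if __name__ == "__main__":
    # Hypothetical 2-2-2 network with hand-picked weights.
    Ws = [[[0.5, -0.3], [0.2, 0.4]], [[0.6, 0.1], [-0.2, 0.5]]]
    Vs = [None, [[0.6, -0.2], [0.1, 0.5]]]  # Vs[0] unused
    for step in range(5):
        print(step, train_step(Ws, Vs, [1.0, 0.5], [0.3, -0.2]))
```

Note that no gradient ever crosses a layer boundary: each `local_update` sees only its layer's input, output, and target, which is the property that makes the approach attractive as a model of learning in the brain. The difference correction ensures that when the output already matches its target, every hidden target equals the current activation, so no spurious updates occur.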

For those interested in a deeper understanding, a full implementation of the original paper is available for review. Exploring this code can provide valuable insights into how the algorithm operates and its potential applications.

Read the full implementation and research details here: Target Propagation Paper

While target propagation may not have replaced backpropagation in mainstream machine learning, its innovative approach continues to inspire researchers exploring more interpretable and biologically aligned neural network training methods.
