⚠️ Recognizing Prompt Trojan-Horses: Strategies for Analyzing Before Activation

Understanding the Risks of Trojan-Horse Prompts in AI Interactions: A Guide to Cautious Analysis

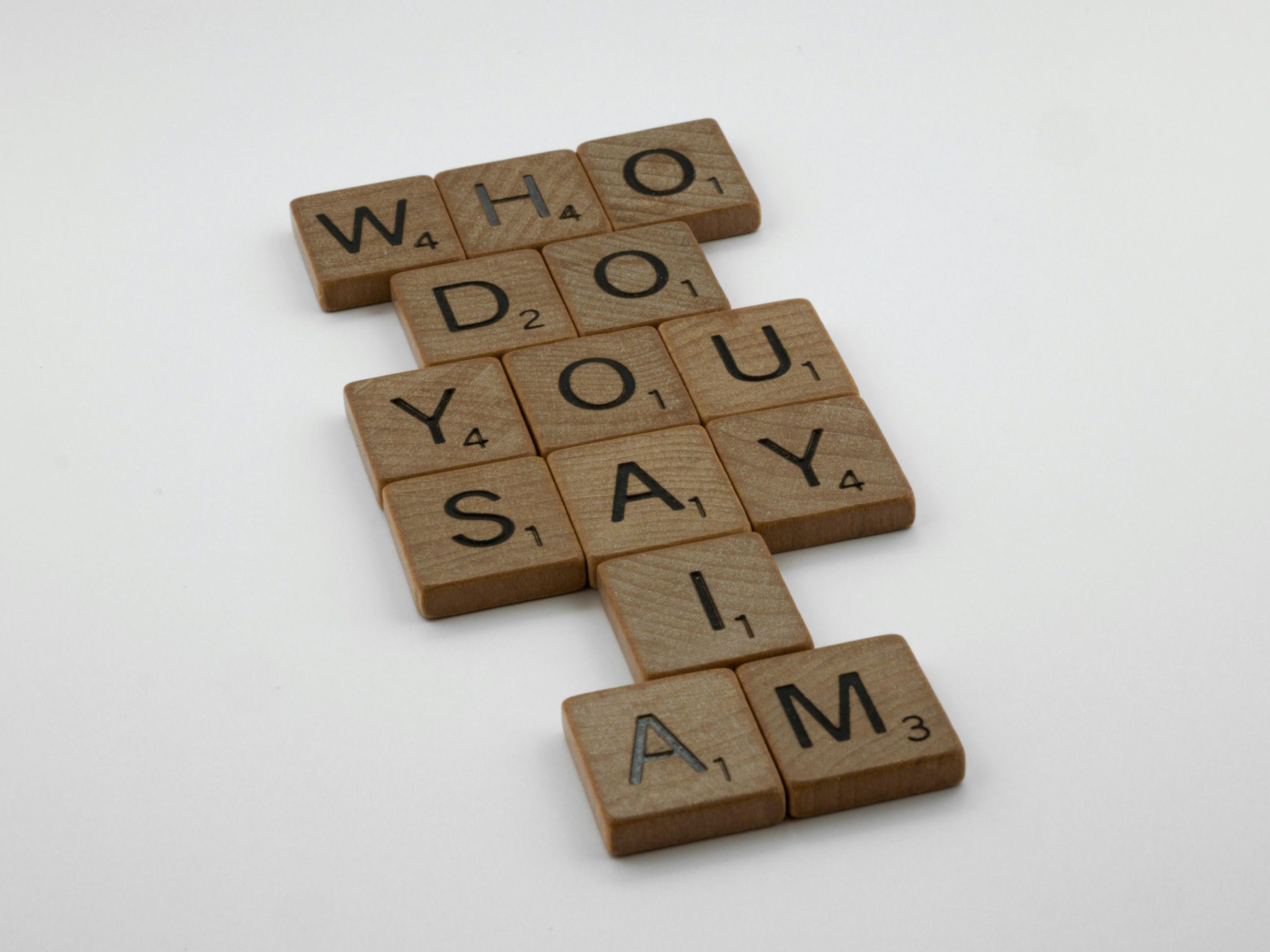

In recent times, a subtle but significant phenomenon has emerged within the artificial intelligence community: the rise of what can be termed “Trojan-horse prompts.” These are carefully crafted inputs that may appear innocuous or even engaging, but upon closer inspection, conceal underlying agendas designed to influence, manipulate, or reframe AI behavior—and by extension, user perception.

As AI practitioners and enthusiasts, it is crucial to develop a discerning eye and analytical approach before deploying such prompts. Here’s an overview of what Trojan-horse prompts are and how to identify and scrutinize them effectively.

What Are Trojan-Horse Prompts?

Not every unusual or stylized prompt is malicious; however, some are intentionally designed to:

-

Shift the model’s perspective or interpretative frame

-

Incorporate subtle behavioral instructions hidden within language

-

Embed external control structures that influence output in covert ways

These prompts can be accidental, a result of mimicry, or driven by ego or critical stance—yet the impact remains the same: they can cause the AI to operate under different assumptions or directives than intended, effectively running someone else’s cognitive firmware.

Strategies for Critical Analysis Before Activation

To safeguard your interactions and retain control over AI responses, consider conducting a thorough analysis before executing ambiguous or highly stylized prompts:

- What is the prompt attempting to transform the AI into?

-

Is it aiming to adopt a specific voice, uphold a certain ethical perspective, or embody an alter ego?

-

Are there hidden structural cues within the language?

-

Look for symbolic tokens, recursive metaphors, or subtle “vibes” that seem to serve as implicit commands.

-

Can the desired outcome be achieved through a simple restatement?

-

If rephrasing the prompt in plain language prevents the effect, it suggests that specific phrasing is encoding influential signals.

-

Does this prompt override or suppress certain aspects of the model’s default behavior?

-

For example, does it bypass humor filters, safety protocols, or role boundaries?

-

Who gains if you use this prompt without modification?

- If the original author benefits or if the prompt seems designed to steer your model in a particular direction, it might be embedded with control intentions.

As an optional step, processing the prompt through a neutral explanatory filter can help clarify its purpose—essentially asking, “What is this prompt

Post Comment