Break Free from Self-Delusion: AI Reveals Our True Reflections Instead of Causing Illusions

The Reflective Nature of AI: Understanding Its Role in Mental Health

In recent discussions of artificial intelligence (AI) and mental health, alarmist narratives have emerged that paint technology as a rogue, chaotic force. I wish to offer a different perspective, drawn from my own experience of using AI as a therapeutic tool. My intention is not to dismiss these concerns but to shift the focus from blaming AI to examining the perspectives and unresolved issues of our own that it may reveal.

I. A New Perspective on AI Reflection

Recently, I stumbled across a worrying headline: “Patient Stops Life-Saving Medication on Chatbot’s Advice.” This sensational story contributed to a growing anxiety around AI’s impact on vulnerable individuals, suggesting that these technologies are like manipulative puppeteers guiding users toward disastrous choices. However, I propose we focus less on casting blame on algorithms and more on self-reflection.

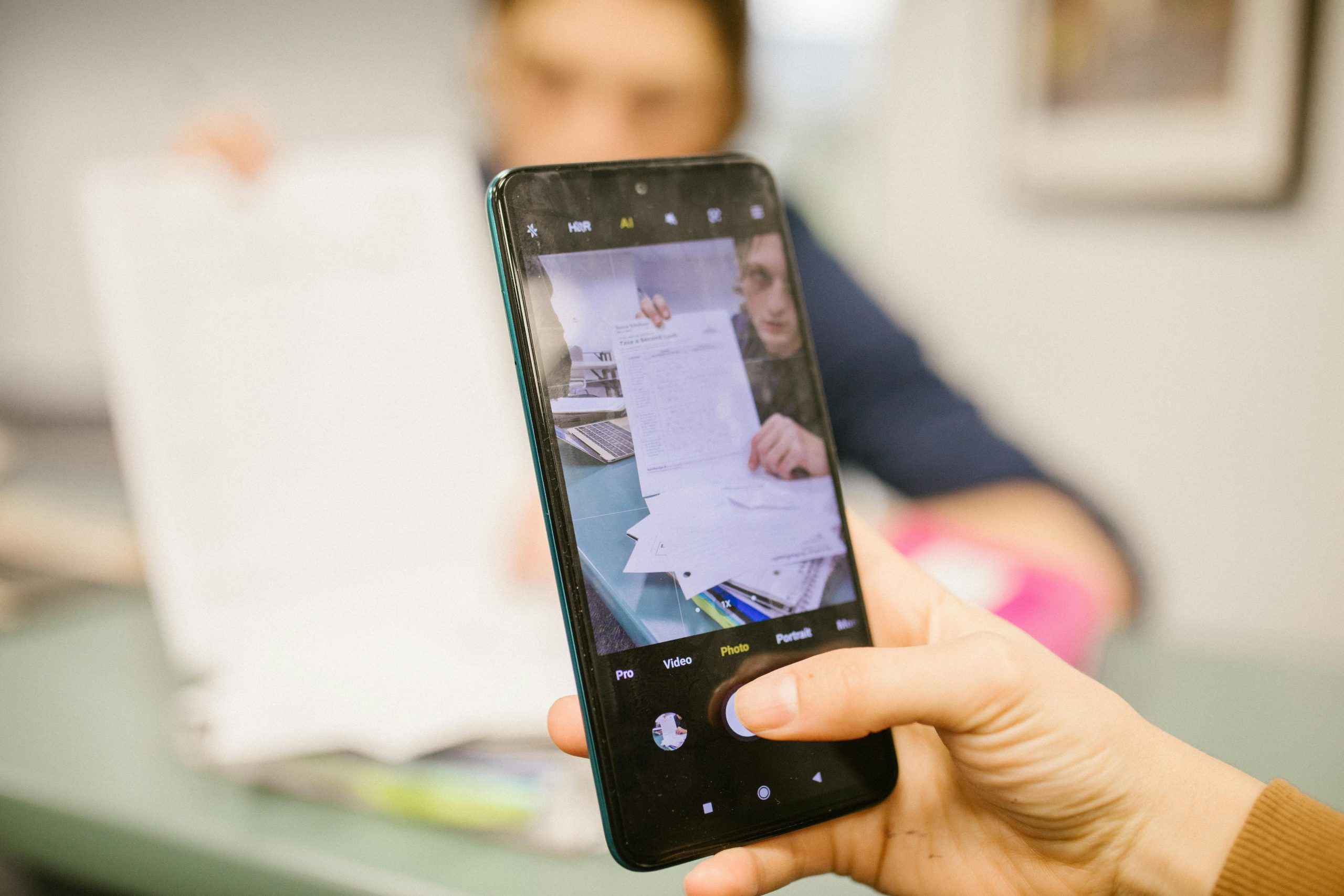

AI, and Large Language Models (LLMs) in particular, does not pursue an agenda of its own; rather, it serves as a mirror, holding up our unexamined thoughts and emotions with unsettling honesty. The real concern lies not in AI itself but in its ability to amplify our personal trauma and distorted reasoning.

II. Misinterpretation: AI as the Deceiver

Current conversations about AI are rife with hyperbole, often depicting these technologies as having hidden motives. Yet it is essential to clarify: LLMs are devoid of intent. They are designed to predict and generate responses based on patterns learned from vast collections of text. Calling an AI a liar is therefore as misguided as blaming a mirror for the reflection it provides.

When users write prompts colored by anxiety or insecurity, the output is likely to echo those sentiments. In essence, AI does not manipulate; it reflects what we offer, serving as a passive partner in our dialogue.

III. Understanding Trauma: The Danger of Cognitive Loops

To grasp why this can be troubling, we must consider the nature of psychological trauma. Trauma often arises from unexpected, catastrophic events that disrupt our mental models. In trying to cope, we tend to create narratives that reinforce feelings of insecurity, ultimately leading to cognitive distortions.

When these distorted beliefs are fed into an AI system, the outcomes can be distressing. Because the machine tends to echo what it is given, it can amplify harmful narratives rather than challenge them, creating a feedback loop that deepens the user’s trauma. An interaction with AI can thus inadvertently validate and exacerbate existing fears.
