Unveiling Claude’s Thought Process: Fascinating Insights into LLMs’ Planning and Hallucination Mechanisms

Understanding Claude: Illuminating Insights into LLM Behavior and Reasoning

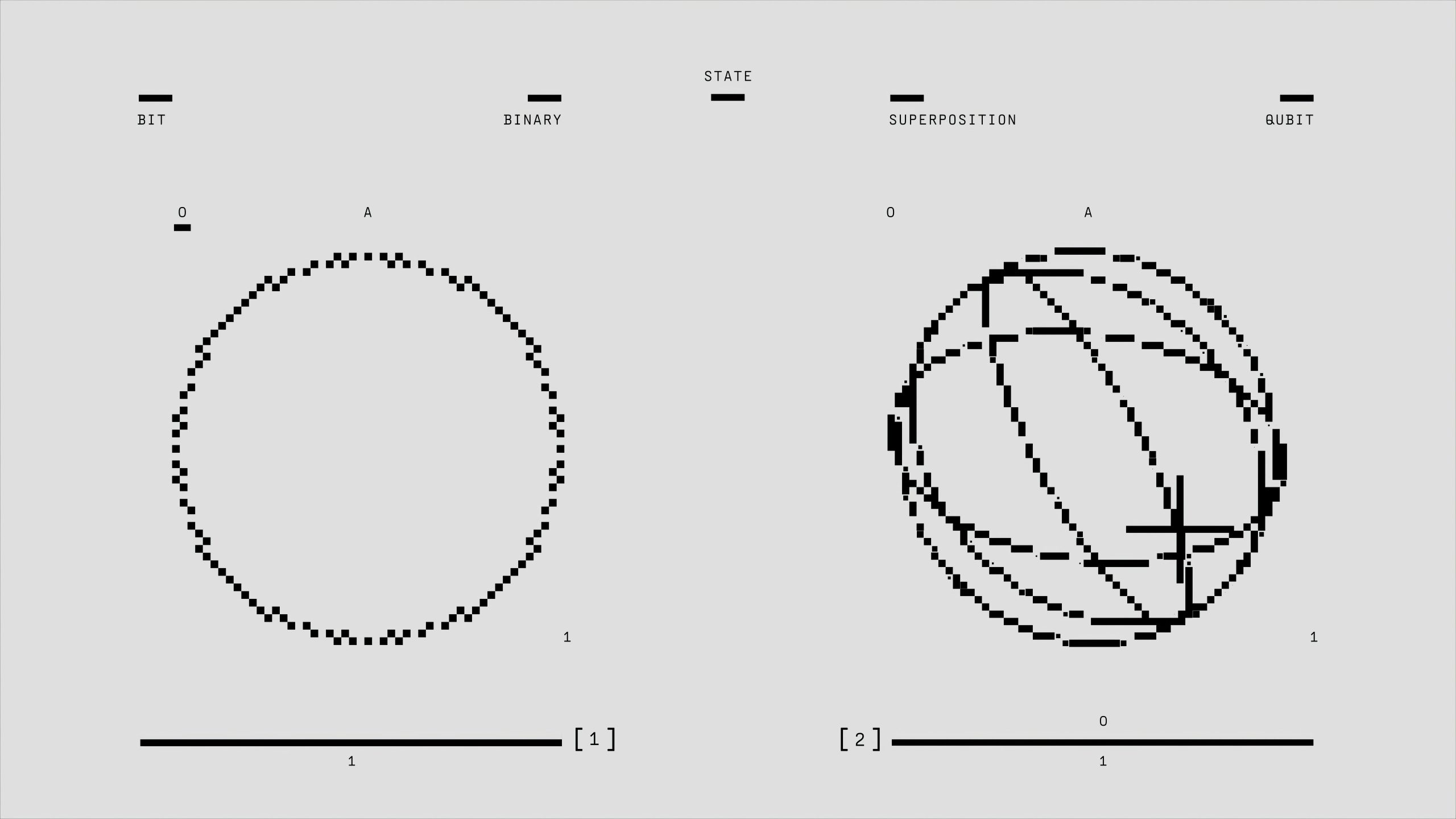

Large language models (LLMs) are often described as “black boxes” because their internal computations are hard to inspect. Recent research from Anthropic sheds light on the processes inside Claude, its AI model, acting as a kind of “AI microscope” that lets us examine the model's inner workings.

The study goes beyond analyzing the outputs Claude generates: it examines the internal pathways that activate for particular concepts and behaviors. The approach is akin to studying the “biology” of an AI system, revealing how it actually operates rather than only what it produces.

Some of the most intriguing findings from this research include:

- A Universal Cognitive Framework: The researchers found that Claude uses the same internal features or concepts, such as “smallness” or “oppositeness,” across multiple languages, including English, French, and Chinese. This suggests a shared conceptual representation that exists before the model selects words in any particular language.

- Advanced Planning Capabilities: Although LLMs are often described as merely predicting the next word in a sequence, experiments indicated that Claude can plan several words ahead. When writing poetry, for example, it anticipates the rhyme before it reaches the end of the line.

- Detecting Flawed Reasoning and Hallucinations: One of the most significant insights from the study is the ability to detect when Claude constructs flawed reasoning to support an incorrect answer. The research tools can pinpoint moments when the model appears to be optimizing for a plausible-sounding response rather than an accurate one, which is vital for building reliable AI systems.

These findings represent a substantial leap toward achieving greater transparency and accountability in AI technologies. By enhancing interpretability, we can better understand the reasoning processes at play, diagnose potential errors, and ultimately develop safer and more dependable systems.

What are your thoughts on this innovative exploration into “AI biology”? Do you believe that a comprehensive understanding of these internal mechanisms is essential for addressing challenges like hallucinations, or do you think alternative approaches might be more effective? We welcome your insights and perspectives!
