Unraveling Claude’s Mind: Intriguing Perspectives on How Large Language Models Generate Ideas and Diverge

Unveiling Claude’s Cognitive Processes: New Research Sheds Light on LLM Functionality

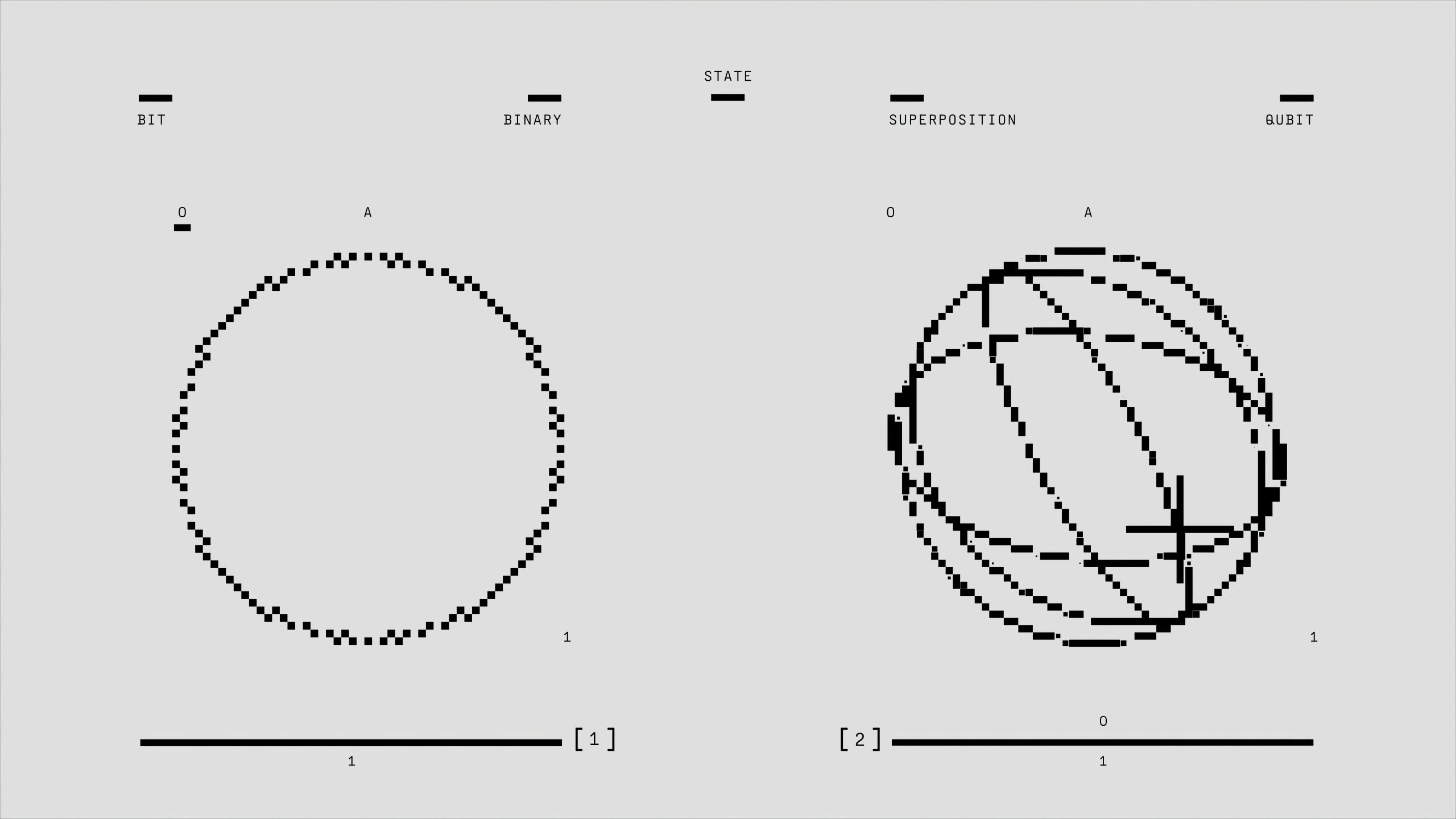

In discussions of large language models (LLMs), the term “black box” comes up often, reflecting how opaque these systems are. They produce remarkable outputs, yet their internal workings have remained largely hidden. Recent research from Anthropic offers a look at the internal processes of Claude, using what can be described as an “AI microscope.”

This research goes beyond analyzing Claude’s text outputs; it investigates the internal features that activate for various concepts and actions. It’s comparable to studying the “biology” of artificial intelligence, helping us understand how these models function at a deeper level.

Some of the most intriguing findings from this study include:

- A Universal Cognitive Framework: Researchers discovered that Claude uses the same internal features, such as concepts of “smallness” or “oppositeness,” across multiple languages, including English, French, and Chinese. This points to a shared conceptual structure that comes into play before a language is selected for the output.

- Proactive Planning Abilities: Contrary to the common notion that LLMs only predict the next word, evidence suggests that Claude plans several words ahead. This includes anticipating rhymes when writing poetry, choosing in advance the word a line should end on.

- Identifying Fabrications and Hallucinations: Perhaps one of the most significant advances is the researchers’ ability to pinpoint cases where Claude fabricates reasoning to support an incorrect answer. This makes it possible to see when the model prioritizes a plausible-sounding response over factual accuracy, which improves our understanding of model behavior.
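To make the first finding a little more concrete, here is a toy sketch in Python of what “shared features across languages” could mean. It is not Anthropic’s method or data: the feature names, the per-language sets, and the `shared_fraction` helper are all invented for illustration, on the assumption that each prompt activates some language-independent concept features plus at least one language-specific one.

```python
# Toy illustration only. The feature names and sets below are hypothetical,
# not measurements from Anthropic's research.
active_features = {
    "English": {"smallness", "oppositeness", "english_output"},
    "French": {"smallness", "oppositeness", "french_output"},
    "Chinese": {"smallness", "oppositeness", "chinese_output"},
}


def shared_fraction(features_by_language):
    """Return the fraction of all observed features that are active in every language."""
    feature_sets = list(features_by_language.values())
    shared = set.intersection(*feature_sets)  # features present in every language
    union = set.union(*feature_sets)          # features present in at least one language
    return len(shared) / len(union)


shared = set.intersection(*active_features.values())
print(sorted(shared))                    # the language-independent features
print(shared_fraction(active_features))  # share of features common to all languages
```

In this made-up example, two of the five distinct features are common to all three languages. A higher shared fraction would mean more of the model’s conceptual work happens in a language-independent space.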

This interpretability research is an important step toward more transparent and reliable artificial intelligence. By exposing how LLMs arrive at their answers, it helps us diagnose issues, fix failures, and ultimately build safer systems.

What are your thoughts on this emerging field of “AI biology”? Do you believe that a deeper understanding of these internal mechanisms is essential for addressing challenges like hallucinations, or do you see alternative approaches that might be more effective?

Feel free to engage in the comments below!
