Delving into Claude’s Cognitive Landscape: Fascinating Insights into Large Language Model Strategies and the Origins of Hallucinations

Unveiling the Inner Workings of AI: Insights from Claude’s Processes

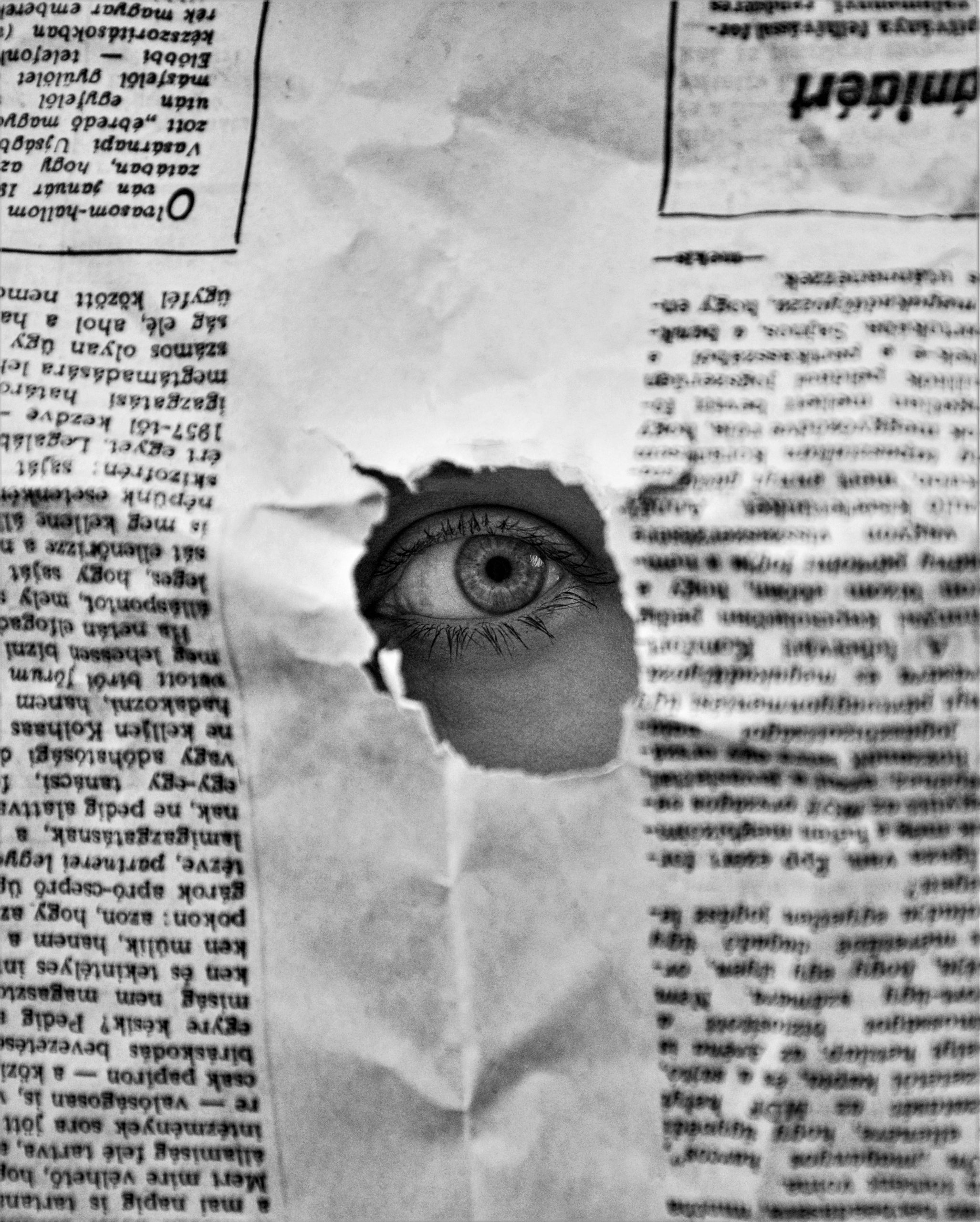

Large Language Models (LLMs) are often described as black boxes: they produce impressive results, but how they arrive at them has remained hard to explain. Recent research from Anthropic sheds light on the inner workings of Claude, using what the researchers liken to an “AI microscope.”

The study does not simply observe the text Claude generates; it traces the internal “circuits” that activate for particular concepts and behaviors. It is a step toward understanding what might be called the “biology” of an AI model.

A few key insights from their research deserve special attention:

1. A Universal “Language of Thought”

One of the most intriguing findings is that Claude appears to use a consistent set of internal “features” or concepts, such as “smallness” or “oppositeness,” regardless of whether the input is in English, French, or Chinese. This suggests a shared conceptual representation that exists before the model commits to words in a particular language.

2. Strategic Planning

The experiments challenge the assumption that LLMs only ever predict the next word. They show that Claude can plan several words ahead: when writing poetry, it anticipates the rhyme it is aiming for before it writes the line that ends on it.

3. Identifying Hallucinations

Perhaps the most important result is that these tools can detect when Claude fabricates reasoning to justify an incorrect answer. This makes it possible to identify cases where the model produces output that sounds credible but is not factually accurate, which in turn helps us judge when AI-generated content can be relied on.

Anthropic’s interpretability research is a meaningful step toward more transparent and reliable AI systems. By exposing the reasoning processes of models like Claude, it helps us diagnose errors and work toward safer, more accountable AI.

What are your thoughts on this exploration of “AI biology”? Do you think a deeper understanding of these internal processes is essential for tackling problems such as hallucination, or do you see other promising approaches? Share your perspective in the comments!
