Delving into Claude’s Cognition: Fascinating Insights into Large Language Models’ Planning and Hallucination Mechanics

Understanding Claude: Insights into the Inner Workings of LLMs

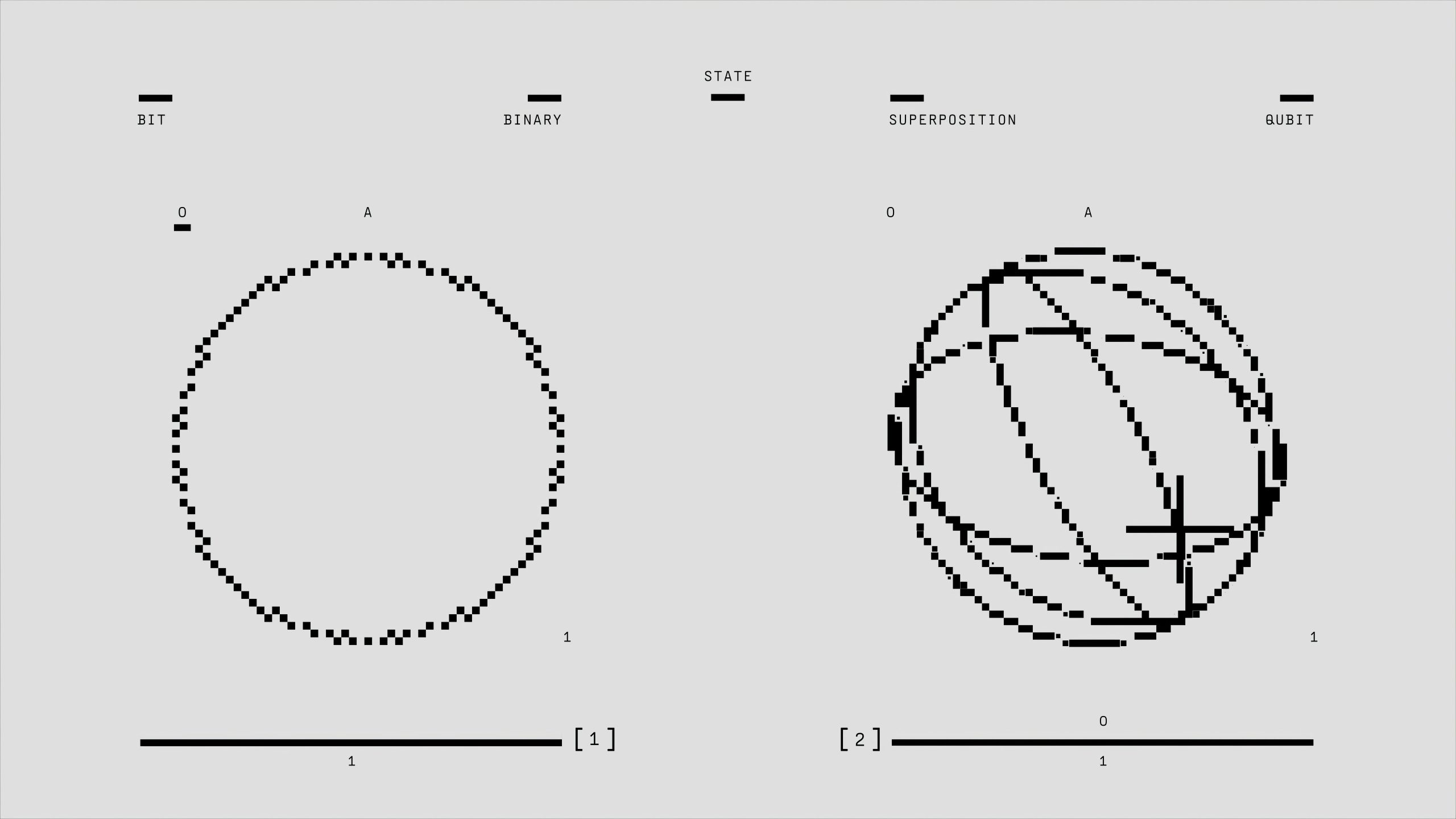

Large Language Models (LLMs) are often described as a “black box”: we can see the impressive outputs they generate, yet their internal mechanics remain largely a mystery. New research from Anthropic is beginning to illuminate those internals, in effect building an “AI microscope” for looking inside Claude.

Rather than analyzing only the responses Claude produces, this research traces the internal “circuitry” that activates for particular concepts and behaviors. The approach is akin to studying the “biology” of an AI system, and it offers a glimpse into how these models operate.

Several compelling discoveries have emerged from this study:

- A Universal ‘Language of Thought’: Researchers found that Claude uses the same internal “features,” or concepts (such as “smallness” or “oppositeness”), regardless of the language being processed, whether English, French, or Chinese. This suggests a shared conceptual representation that exists before the output is expressed in any particular language.

- Strategic Planning: LLMs are commonly described as simply predicting the next word in a sequence, but the experiments showed that Claude can plan several words ahead. When writing poetry, for example, it anticipates the rhyme it is heading toward rather than choosing each word in isolation.

- Identifying Hallucinations: Perhaps the most important insight concerns the model’s tendency to produce misleading rationales. The tools developed in this study can identify when Claude is fabricating reasoning to support an incorrect answer, which helps in detecting when a model is prioritizing plausibility over factual correctness.

This interpretability research is a significant step toward more transparent and reliable systems. By shedding light on how LLMs arrive at their answers, it can help us diagnose failures and build safer, more trustworthy AI applications.

We invite you to share your thoughts on this exploration of “AI biology.” Do you believe that truly comprehending the internal mechanisms of LLMs is essential for addressing issues such as hallucination, or do you see alternative pathways to achieving improved AI reliability? Let’s engage in a thoughtful discussion!
